NOTE TO HEAD-FI MEMBERS (revised 3/28): Unfortunately, before I posted this article, the administrators at Head-Fi.org started censoring links to this blog. So if you had some trouble finding the results here, I’m sorry. The links were apparently not a problem when I was posting at Head-Fi about several other products and topics. The censorship only started after a paying Head-Fi sponsor (NuForce) publicly complained about my review of their product. Head-Fi has even gone so far as to delete posts from other Head-Fi members that reference this blog.

BACKGROUND: NuForce responded to their uDAC-2 measuring poorly by saying it was designed to sound good even if several measurements are notably bad. So I came up with the best way I could think of to judge the NuForce purely on sound quality. I also thought it would be interesting to compare the $29 Behringer UCA202 with a high-end product like the Benchmark DAC1 Pre. The DACs were recorded playing real music under conditions as realistic and similar as possible. Anyone could download the recordings and compare them without knowing which was which, sort of like a “brown bag” wine tasting. Would people like the $3 wine better than the $30 wine? I thought it would be fun to find out!

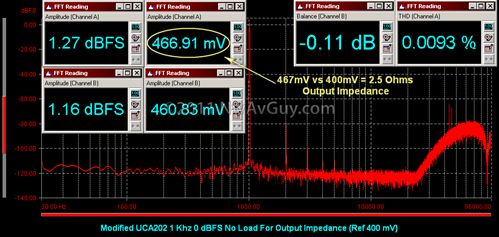

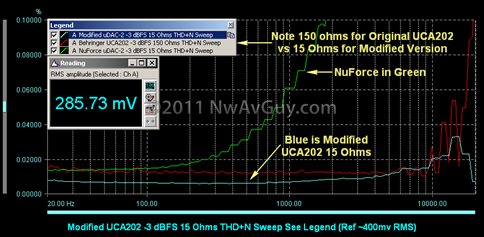

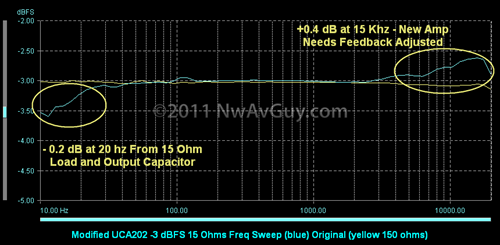

TEST METHODS: I hadn’t conducted a public web-based listening test before, so this was something of a learning experience for me. For the first round, to make the comparison as fair as possible, I used the line outputs of all three DACs. The second round of tests used the headphone outputs driving real headphones and included a modified version of the Behringer UCA202. The modifications used a few dollars’ worth of parts and improve the Behringer’s headphone output. The files were given names of US Presidents in the first test and common trees in the second. The original listening test articles have all the details.
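Assigning code names like these is easy to automate. Here’s a rough Python sketch of the idea: shuffle a list of code names, pair them with the real sources, and keep the resulting key private until voting closes. The function name, source labels, and name list below are hypothetical examples, not necessarily how the names in this test were actually assigned.

```python
import random

def assign_code_names(sources, code_names, seed=None):
    """Randomly pair each source recording with a unique code name.

    Returns the answer key as {code_name: source}. Publish only the
    code names; keep the key private until voting closes.
    """
    if len(code_names) < len(sources):
        raise ValueError("need at least one code name per source")
    rng = random.Random(seed)  # a fixed seed makes the shuffle reproducible
    names = list(code_names)
    rng.shuffle(names)
    # zip stops at the shorter list, so any extra code names go unused
    return dict(zip(names, sources))

# Hypothetical example labels
sources = ["Benchmark line out", "NuForce line out",
           "Behringer line out", "Reference CD"]
presidents = ["Taft", "Harrison", "Wilson", "Monroe", "Lincoln", "Adams"]

key = assign_code_names(sources, presidents, seed=1)
for name in sorted(key):
    print(name)  # listeners see only these names
```

Using an unpredictable seed (or none) matters in practice; a fixed seed is only handy for re-creating the key later.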

A NOTE ABOUT “MASKING” (revised 3/17): Some have questioned the validity of the tests because, as NuForce put it, the output of the DACs has been “re-digitized”. Some also argue the equipment used to play back the files may not be of high enough quality. The concern is that either of these could mask the differences between the DACs. In reality, this should not be much of an issue with playback hardware of reasonable fidelity. Here’s why:

- These trials are only about the differences between the files, not their absolute accuracy. It’s like shopping online for a shirt. You might look at 3 different blue shirts at the same online retailer. Even if your computer display can’t convey every color and detail perfectly, you can still easily tell most of the differences between the shirts if they’re good pictures, all taken the same way, and you have at least a reasonably decent display. For example, if your computer distorts the exact shade of blue, you should still be able to tell which of the 3 shirts is the darkest blue. The same is true in comparing the sound files. Even if your headphones exaggerate the bass, you can still tell differences between the bass in the various files. This “difference effect” has been well documented in research.

- As to “re-digitized”, the Benchmark ADC1 used to convert the output of the DACs to a CD-quality file is already better than most studio gear used to record the music we all listen to. Studio A/D converters typically cost about $100 – $400 per channel of conversion. The ADC1 is a reference-grade A/D with a $900 per channel price tag, and it generally has better specifications than nearly all studio gear. If the less expensive studio gear is good enough to capture the subtle difference between, say, a high-end Steinway and a high-end Yamaha grand piano, the ADC1 should also capture audible differences between DACs.

- Most recordings have already been through many more steps and kinds of digital processing, yet are still very revealing of subtle differences. So adding one more relatively “pure” step isn’t going to make much difference. The recordings used in these tests are unusually pure and transparent.
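To put the shirt analogy in numbers: if the playback chain behaves like a linear filter, it adds the same dB error to every file in a given frequency band, so the file-to-file difference in that band is unchanged. Here’s a minimal Python sketch with made-up band levels and a made-up headphone coloration. All the numbers are hypothetical, and real playback gear is only approximately linear.

```python
# Hypothetical per-band levels (dB) for two test files, in three bands:
# bass, mids, treble.
file_a = [1.0, 0.0, -1.0]
file_b = [0.0, 0.0, -3.0]

# Hypothetical headphone response error (dB) in the same three bands:
# bass boosted by 6 dB, treble rolled off by 2 dB.
headphone_error = [6.0, 0.0, -2.0]

def through_headphones(levels, error):
    """A linear playback chain adds its dB error band by band."""
    return [lvl + err for lvl, err in zip(levels, error)]

heard_a = through_headphones(file_a, headphone_error)
heard_b = through_headphones(file_b, headphone_error)

# Differences between the two files, before and after the headphones
diff_before = [a - b for a, b in zip(file_a, file_b)]
diff_after = [a - b for a, b in zip(heard_a, heard_b)]
print(diff_before)
print(diff_after)
```

The boosted bass shifts both files by the same 6 dB, so the 1 dB bass difference between them survives intact.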

PARTICIPANTS: This was a very informal survey. A total of 20 listeners picked their favorite (and sometimes least favorite) tracks. Many ranked them top to bottom. Some only participated in one of the tests and/or one of the songs. Some used ABX and some listened conventionally. More participated in the first (line out) trial than the second (headphone out) trial.

SCORING: Not everyone participated in every trial, some devices were offered in more trials than others, and different listeners provided different sorts of “votes”. Someone who’s better with statistical analysis than I am is reviewing the raw data. For now, here’s my attempt at a rough summary of the results:

- Top choices scored 2 points

- Second (“Runner Up”) choices scored 1 point

- Least favorite (worst) scored –1 point (several indicated only their least favorite and not a favorite)

- The results are summarized by category (line output vs headphone output)
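The scoring rules above are simple enough to express in a few lines of Python. This is only a sketch of the rules as listed, not necessarily how the tally was actually done. The ballot format (a dict with optional "top", "runner_up" and "worst" entries) is an assumption for illustration, and the example ballots are made up.

```python
from collections import Counter

# Points per the scoring rules above
POINTS = {"top": 2, "runner_up": 1, "worst": -1}

def score_ballots(ballots):
    """Tally points per file.

    Each ballot is a dict with any of the keys "top", "runner_up" and
    "worst", each naming one file. Listeners may leave any of them out,
    e.g. someone who only named a least favorite.
    """
    totals = Counter()
    for ballot in ballots:
        for choice, file_name in ballot.items():
            totals[file_name] += POINTS[choice]
    return totals

# Hypothetical ballots
example_ballots = [
    {"top": "Taft", "runner_up": "Harrison", "worst": "Adams"},
    {"worst": "Monroe"},                      # least favorite only
    {"top": "Harrison", "runner_up": "Taft"},
]

totals = score_ballots(example_ballots)
for file_name, points in totals.most_common():
    print(file_name, points)
```

A Counter keeps the code short because files nobody mentioned simply start at zero.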

RESULTS (corrected 2 minor errors 3/16): Here are the total points scored for each downloaded file ranked from most favored (highest score) to least favored using the above scoring method:

| File | Source | Output | Track | Points |
|---|---|---|---|---|
| Taft | Benchmark | Line out | Brick House | 9 |
| Harrison | NuForce | Line out | Brick House | 8 |
| Juniper | Benchmark | Headphone out (CX300) | Brick House | 7 |
| Wilson | Behringer | Line out | Brick House | 6 |
| Jefferson | Reference CD | n/a | Just Dance | 4 |
| Lincoln | Benchmark | Line out | Just Dance | 3 |
| Maple | Modified Behringer | Headphone out (CX300) | Tis of Thee | 3 |
| Oak | Behringer | Headphone out (CX300) | Brick House | 2 |
| Spruce | Modified Behringer | Headphone out (CX300) | Brick House | 2 |
| Acorn | Benchmark | Headphone out (CX300) | Tis of Thee | 2 |
| Monroe | Reference CD | n/a | Brick House (!) | 1 |
| Hawthorn | NuForce | Headphone out (CX300) | Tis of Thee | 1 |
| Fig | Behringer | Headphone out (CX300) | Tis of Thee | 1 |
| Jackson | NuForce | Line out | Just Dance | 0 |
| Cypress | NuForce | Headphone out (CX300) | Brick House | 0 |
| Adams | Behringer | Line out | Just Dance | -2 |
| Pine | NuForce | Headphone out (UE SF5) | Tis of Thee | -4 |

| Device and output | Total points |
|---|---|
| Benchmark Line Out | 12 |
| Benchmark Headphone Out | 9 |
| NuForce Line Out | 8 |
| Behringer Line Out | 4 |
| Modified Behringer Headphone Out | 5 |
| Behringer Headphone Out | 3 |
| NuForce Headphone Out (CX300) | 1 |
| NuForce Headphone Out (Ultimate Ears SF5) | -4 |
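The device totals are just the per-file points summed by device and output. The two reference CD tracks (Jefferson and Monroe) don’t belong to any device, so they aren’t included. Here’s a quick Python check using the per-file scores from the table above; the grouping labels are shorthand for the rows of the totals table.

```python
from collections import defaultdict

# (file, device and output, points), taken from the results table
FILE_SCORES = [
    ("Taft", "Benchmark Line Out", 9),
    ("Lincoln", "Benchmark Line Out", 3),
    ("Juniper", "Benchmark Headphone Out", 7),
    ("Acorn", "Benchmark Headphone Out", 2),
    ("Harrison", "NuForce Line Out", 8),
    ("Jackson", "NuForce Line Out", 0),
    ("Wilson", "Behringer Line Out", 6),
    ("Adams", "Behringer Line Out", -2),
    ("Spruce", "Modified Behringer Headphone Out", 2),
    ("Maple", "Modified Behringer Headphone Out", 3),
    ("Oak", "Behringer Headphone Out", 2),
    ("Fig", "Behringer Headphone Out", 1),
    ("Hawthorn", "NuForce Headphone Out CX300", 1),
    ("Cypress", "NuForce Headphone Out CX300", 0),
    ("Pine", "NuForce Headphone Out Ultimate Ears SF5", -4),
]

def device_totals(file_scores):
    """Sum per-file points for each device/output combination."""
    totals = defaultdict(int)
    for _file, device, points in file_scores:
        totals[device] += points
    return dict(totals)

# Print from highest to lowest total
for device, total in sorted(device_totals(FILE_SCORES).items(),
                            key=lambda item: -item[1]):
    print(f"{device}: {total}")
```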

COMMENTS ON RESULTS (edited 3/16): Even with the small sample size, a few things were fairly clear:

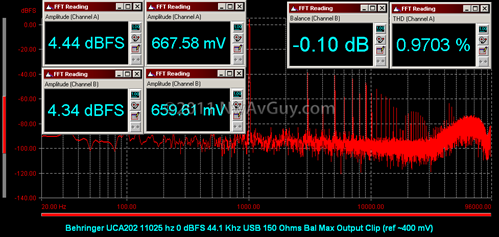

- The NuForce headphone output with the Ultimate Ears SuperFi 5 Pro headphones was an obvious “fail”. Several listeners expressed a clear dislike of “Pine” and nobody favored it. I think this is due to the large frequency response variations caused by the relatively high output impedance of the NuForce uDAC-2 with these headphones. Several commented that the high frequencies were rolled off or “dull”. This is consistent with what the measurements would predict.

- The reference CD tracks didn’t score as well as expected. For “Monroe” it’s likely because the reference track stood out as being different. Early on in the forums it was labeled “by far the worst”, and that public comment likely influenced others to also hear it as the “worst”. Six people rated it as the worst of that group of tracks. I personally think this is a good example of subjective bias: the original piece of music was deemed the “worst”! The Just Dance reference fared much better, with a positive score of 4, likely because it wasn’t publicly labeled as bad. And far fewer people even bothered to vote on Just Dance, so the score of 4 was close to “perfect”.

- In terms of total votes, the Benchmark stood out as a clear favorite. It was included mainly as a “reference” and not necessarily as fair competition for the other two, much cheaper, DACs. But some might have expected all the DACs to sound roughly similar via the line outputs based on the measurements. There could be many reasons why this didn’t happen. The most likely is the same “peer bias” that caused a reference track to be rated poorly (see above). But it’s also possible the cheaper DACs do have audible problems.

- The NuForce did much better via the line outs than the headphone outputs. Given the output impedance problem and higher distortion of the headphone output, this is what the measurements would predict.

- Any channel balance error was corrected in all tests. This removed the NuForce’s audible channel imbalance, helping it score better than it otherwise might have.

- NuForce’s claim of “better sound” doesn’t seem to hold up when using headphones. It fared poorly even with the more common and “impedance friendly” Sennheiser CX300s.
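One common way to correct a channel balance error is to measure the level difference between channels on a test tone played identically to both, then attenuate the louder channel by that amount. Here’s a minimal Python sketch of that approach, assuming the audio is already loaded as lists of float samples. The function names and the 10% imbalance in the example are hypothetical, and this isn’t necessarily the exact method used for these recordings.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def imbalance_db(left_tone, right_tone):
    """Channel level difference in dB, measured on a test tone fed
    equally to both channels. Positive means the left channel is louder."""
    return 20 * math.log10(rms(left_tone) / rms(right_tone))

def correct_balance(left, right, imbalance):
    """Attenuate the louder channel so both match (cutting rather than
    boosting avoids any risk of clipping)."""
    if imbalance > 0:
        gain = 10 ** (-imbalance / 20)
        return [s * gain for s in left], list(right)
    gain = 10 ** (imbalance / 20)
    return list(left), [s * gain for s in right]

# Hypothetical capture: a 1 kHz tone at 48 kHz, right channel 10% low
tone_l = [math.sin(2 * math.pi * 1000 * n / 48000) for n in range(4800)]
tone_r = [0.9 * s for s in tone_l]

measured = imbalance_db(tone_l, tone_r)
left, right = correct_balance(tone_l, tone_r, measured)
print(f"imbalance: {measured:.2f} dB")
```

Measuring on a tone rather than on the music itself matters, because real music is rarely identical in both channels.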

Trying to draw other conclusions is a bit more difficult, but some things worth noting:

- Nearly half the people couldn’t hear any differences at all.

- The Just Dance track had a lot of inherent distortion that made hearing differences difficult for most. So those results are likely less valid (and there are fewer of them).

- A number of votes were somewhat randomly distributed among all the middle-scoring test files. This may suggest guessing rather than clear preferences.

- The line outputs of the $29 Behringer didn’t do as well as its measurements would suggest. The NuForce and Benchmark were preferred. This could be “peer bias”.

- The differences among the individual files were more obvious via the headphone outputs than via the line outputs. This is to be expected due to the impedance interaction.

- For those who argue tests like this mask differences, it’s interesting to note the test may not have masked any subtle advantages of the high-end Benchmark, even though few listened to the files on similarly high-end gear.

BOTTOM LINE: I think the most obvious result is that roughly half the participants couldn’t tell any difference, and the votes for the “middle scoring” files were almost random, with no clear preferences. Comparing the headphone outputs, however, the differences seemed more obvious. And they were very obvious when using balanced armature headphones (the SuperFi) on the NuForce. It’s clear the frequency response variations created by the NuForce’s relatively high output impedance cause audible problems.

It’s apparent that subjective opinions of differences are easily swayed by public comments. The reference track from the CD of Brick House was strongly disliked by six listeners. They heard something different and, based on earlier public comments, were easily swayed into thinking different was worse. It took just one person saying something negative for several others to follow. This is exactly the sort of subjective bias that affects the majority of subjective reviews in online forums. As further evidence, others using ABX (which is blind) voted the same track their favorite.
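For anyone scoring their own ABX runs, the usual check is how likely a given number of correct answers would be from pure guessing, where each trial is a 50/50 coin flip. Here’s a minimal Python sketch of that one-sided binomial calculation. The function name is hypothetical, and the 12-of-16 example is made up, not a participant’s actual result.

```python
from math import comb

def abx_p_value(correct, trials):
    """Probability of getting at least `correct` right out of `trials`
    ABX trials by guessing alone (one-sided binomial test, p = 0.5)."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    # Count every outcome with `correct` or more hits, out of 2**trials
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials

print(round(abx_p_value(12, 16), 4))  # 0.0384
```

A result like 12 of 16 (about a 4% chance by guessing) is commonly treated as evidence of a real audible difference, while 8 or 9 of 16 is what guessing typically produces.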

There’s also significant evidence that the NuForce uDAC-2 does not sound better, as NuForce claims, at least with the 2 different types of headphones used here. But it seemed to do a respectable job via the line outputs, at least with the channel balance error removed.

Overall I think this has been an interesting experiment. I learned a lot about how to run (and not run!) a listening test. And there were some fairly clear results, some expected and some not. If there’s sufficient interest, I may try to build on what I’ve learned here and conduct more listening tests.

COMMENTS WELCOME: Please feel free to add comments on the results at the end of this article, especially if you participated. I’d also like to know how many of you are interested in future listening tests. They’re a fair amount of work to put together and only really valid with a reasonable number of votes, so it’s something I only want to do if there’s enough interest. Please feel free to make suggestions, too.

TECH SECTION:

IMPROVING THE RESULTS: I realize this wasn’t the best-run study. It was more an informal experiment than anything. I think a larger-scale listening test with more uniformity would be needed to verify some of the closer results. If I do this again, I’ll research better methods, and I also welcome input from others with experience in this area.

DIFFERENT LOADS: It’s been suggested that it would also be useful to include high-impedance headphones. Apart from the complexity that adds, I agree that would be interesting. I chose low-impedance headphones because they’re, by far, the most popular, especially for use with a portable entry-level DAC.

PASSWORD: An encrypted 7-Zip file was included with the file descriptions to prevent me from cheating or changing anything after people voted. The password is:

CY&YUMN5cZ9x2X8BhNj2t
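The encrypted archive works as a “commitment”: the answer key is locked in before voting and revealed afterward. A cryptographic hash can do the same job. This is a different technique from the 7-Zip approach used here: publish the SHA-256 hash of the answer key (plus a random salt) up front, then publish the key and salt after voting so anyone can check they match. The salt keeps people from simply hashing every possible key to find the answer early. Here’s a minimal Python sketch; the function names and key text are hypothetical examples.

```python
import hashlib
import secrets

def commit(answer_key: str) -> tuple[str, str]:
    """Return (digest, salt). Publish the digest before voting;
    keep the salt and the key private until the reveal."""
    salt = secrets.token_hex(16)  # 32 random hex characters
    digest = hashlib.sha256((salt + answer_key).encode("utf-8")).hexdigest()
    return digest, salt

def verify(answer_key: str, salt: str, digest: str) -> bool:
    """After the reveal, anyone can recompute the hash and compare."""
    recomputed = hashlib.sha256((salt + answer_key).encode("utf-8")).hexdigest()
    return recomputed == digest

key_text = "Example key: file A = DAC 1, file B = DAC 2"  # hypothetical
digest, salt = commit(key_text)
print(verify(key_text, salt, digest))                # True
print(verify(key_text + " (edited)", salt, digest))  # False
```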

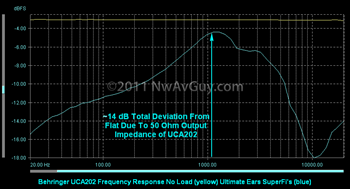

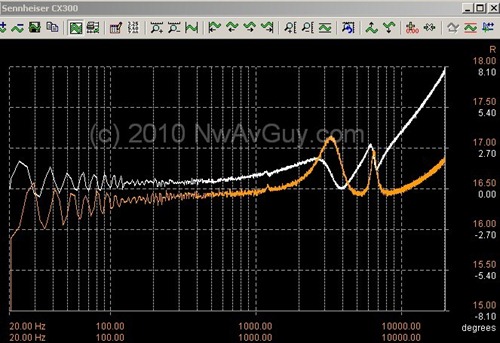

PINE EXPLAINED: The blue trace in the graph shows the frequency response of the NuForce uDAC-2 driving the Ultimate Ears headphones used in the trial.

The 4+ dB of response variation in that graph is caused by the relatively high output impedance of the uDAC-2 interacting with the SuperFi headphones (typical of balanced armature designs from many manufacturers). For more on this, see Headphone & Amp Impedance.
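The mechanism is a simple voltage divider: the amp’s output impedance and the headphone’s impedance split the voltage between them, so wherever the headphone’s impedance changes with frequency, the level it receives changes too. Here’s a minimal Python sketch that treats both impedances as purely resistive (a simplification). The impedance values are hypothetical, not measurements of the uDAC-2 or the SuperFi 5.

```python
import math

def level_db(z_out, z_load):
    """Level at the headphone relative to the amp's unloaded output,
    treating both impedances as resistive: 20*log10(Zload / (Zload + Zout))."""
    return 20 * math.log10(z_load / (z_load + z_out))

def response_variation_db(z_out, load_by_freq):
    """Peak-to-peak level variation (dB) across the given frequencies."""
    levels = [level_db(z_out, z) for z in load_by_freq.values()]
    return max(levels) - min(levels)

# Hypothetical headphone impedance (ohms) at a few frequencies (Hz),
# varying strongly with frequency the way many balanced armature designs do
load = {100: 20.0, 1000: 21.0, 3000: 45.0, 10000: 12.0}

for z_out in (0.5, 10.0):  # hypothetical low and high output impedances
    print(f"Zout={z_out} ohm: {response_variation_db(z_out, load):.1f} dB")
```

With these made-up numbers, the low output impedance source stays within a fraction of a dB, while the higher one varies by several dB across the same headphone load.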

OTHER DETAILS: The other details of how the test was run can be found in the original articles: