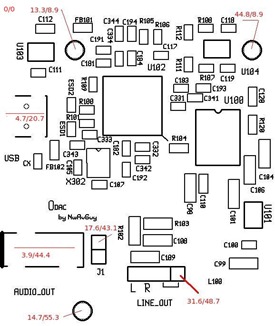

FIRST SOME UPDATES: This is a promising sign. I’ve managed to publish another article in less than a week, and it’s a big one! Since last weekend’s April Fool’s article we’ve finalized the ODAC design and it’s off to production. The best-case estimate for availability is mid-May, assuming all goes well. I plan to publish an article with the full measurements in the next few weeks. For now, I need a temporary break from the ODAC. (photo: BBC Two)

WHAT WE HEAR: The recent review of the O2 Amplifier at Headfonia has generated questions about why the reviewer heard what they heard. For example, a few have asked if the O2 is genuinely a poor match for the Sennheiser HD650, as the reviewer claims. How do we determine if that’s true and, if the review is wrong, what happened? Others have asked if “colored” audio gear can sound better. This article explores what’s often wrong with typical listening comparisons, subjective reviews and “colored” amplifiers.

THE SPECIFIC COMMENTS IN QUESTION: I’m not trying to single out Headfonia or its authors; the review is simply a timely, concrete example. The authors (Lieven and Mike) wrote:

“I really don’t find the Senns [HD650] to pair well with the O2”. Lieven also said the O2 (presumably with the HD650) was: “ …missing some punch and impact. But the bass isn’t the only thing bothering me actually, there also is something bothering me with the mids. They sound a bit thin to me to be honest, I just feel like I am missing out on something using the O2. It isn’t bad sounding, and I’m sure lot’s of people love it, but I’m not one of them, I’m missing something to enjoy what I’m hearing.”

HUMAN PERCEPTION & SCIENCE COLLIDE: Just because the earth seems flat from where you’re standing doesn’t make it true. Our senses don’t always tell us the truth. This article is mainly about how all of us, audio reviewers included, perceive things, and why.

TWO BUCK CHUCK (part 2): Robin Goldstein conducted over 6000 wine tastings with over 500 people, including many wine experts who work in the industry. He found that when people know the prices of the wines, they clearly prefer the more expensive ones. But when the same wines are served “blind” (anonymously), the votes reverse and they express a strong preference for the least expensive wines.

FAKE RESTAURANTS & BUG SPRAY: Goldstein exposed one of the most prestigious sources of wine ratings in the world, Wine Spectator, as arguably having its awards up for sale. He created a fictitious restaurant with a wine list featuring poorly reviewed wines with descriptions like “bug spray” and “barnyard”. Then, after paying the appropriate fee, his fake restaurant managed to score a Wine Spectator Award of Excellence. Goldstein argues organizations like Wine Spectator do not provide the sort of unbiased advice many anticipate. He also concluded their ratings have a heavy commercial and financial component the public is largely unaware of. It’s alarmingly analogous to high-end audio, including the questionable awards. For those into podcasts, check out the NPR story on Goldstein and wine myths. He’s also written a book on the subject called The Wine Trials. (photo: heatherontravels)

TASTE WRONG: How can the same people taste the same wines in two different tastings and rate them completely differently? The answer is that our senses are not nearly as reliable as we think. In this case, what’s known as expectation bias caused the wine tasters to perceive the wines differently. They expected the more expensive wines to taste better, so their brains filtered their perception of taste to deliver on that expectation. But when they knew nothing about the wines, they had no expectations, so the bias was removed. I’ll explain more shortly, but this is also critical to what we hear.

SEE WRONG: Studies show our vision is easily fooled. A video linked in the Tech Section shows a classroom experiment where students witness what they believe is a real crime. Afterward they’re shown photos and asked to identify the suspect, and many are so sure of their choice they would even testify in court. But, alarmingly, there’s no consensus in the photos they choose, and in the end none of them is correct! Their brains filtered out too many details of the thief. When shown the photos, each of their brains helpfully invented different missing details to match one of the photos. We do the same thing with audio (see the Tech Section).

HEAR WRONG: Just like with taste and vision, our hearing is heavily and involuntarily filtered. Only around 0.001% of what we hear makes it to our conscious awareness. This is hardwired into us for survival. We would go crazy from sensory overload if this filtering didn’t take place 24/7. Studies show you can play the identical track, on identical equipment, two different times, and the odds are good people will hear significant differences if they’re expecting a difference. This is called expectation bias and it’s the brain “helpfully” filtering your hearing to match your expectations—just like wine tasting. I reference the auditory work of James Johnston and more in the Tech Section. (photo: JustinStolle CC)

THINK YOU’RE EXEMPT FROM HEARING BIAS? People who have made a career of studying the human auditory system have yet to encounter anyone who can overcome their sensory bias. If you have any doubt I challenge you to watch this video and try to overcome your listening bias. If you can’t reliably do it here with a single syllable, even when you know the exact problem, it’s safe to say you don’t have a chance with the much greater complexity of music and a wide range of expectations:

- McGurk Effect Video – A 3-minute non-technical BBC video that lets anyone experience how our brains involuntarily filter what we hear based on other information. (courtesy BBC Two)

SKILLED LISTENING: You can learn what to listen for and hear more subtle flaws. Studies have shown recording engineers are especially good at it, even better than hardcore audiophiles and musicians. Recording engineers make their living listening for that one clipped note. But they’re still not exempt from expectation bias.

RECORDING ENGINEERS FAIL: Ethan Winer, something of a recording studio expert, points out that even recording engineers get caught making adjustments to a mix and hearing the desired result when the controls weren’t even active. Nothing changed in the sound, but their brains deceived them into hearing the expected changes. And in blind listening tests, just like everyone else, they hear differences that don’t exist if they’re expecting differences. (photo by: RachelleDraven CC)

THE TIME FACTOR: Our brains are like leaky sieves when it comes to auditory details. We can’t possibly remember the massive amount of data contained in even one song (roughly equivalent to one million words!). So, here again, our brains out of necessity heavily filter what we remember. The students in the sight example above thought they had accurate memories of the thief, but they turned out to be very wrong. The science shows our audio memory starts to degrade after just 0.2 seconds. So if switching from Gear A to Gear B takes more than a fraction of a second, you’re already losing information. The longer the gap, the more you forget and the more bias distorts what you do remember.

WHAT WE HEAR: Neurologists, brain experts, hearing experts, and audio experts all agree the human hearing system, by necessity, discards around 99.99% of what arrives at our ears. Our “reptile brain” is actively involved in determining what gets discarded. For example, when you’re listening to someone in a noisy restaurant, the brain does its best to deliver just their voice. And, as multiple studies demonstrate, when you’re listening to audio gear the brain also does its best to filter your hearing the way it thinks you most want. If you’re expecting Gear A to sound different from Gear B, the brain filters each differently, so you indeed hear a difference even when there isn’t one. This auditory issue has many names. I generally call it “Subjective Bias”, but it’s what’s behind “Expectation Bias”, “The Placebo Effect”, and “Confirmation Bias”. I highly recommend the book Brain Rules by John Medina, and there are more resources in the Tech Section.

BACK TO HEADFONIA’S REVIEW: Knowing the O2 measures well creates an unavoidable bias that it might be too “sterile”, which implies, as Lieven said, it could be “missing something” or sound “thin”. When he knows he’s listening to the O2, his brain would involuntarily filter his hearing to deliver the expected “sterile” sound. Someone could set up a blind test between the O2 and a measurably transparent amp Lieven really likes, and he would no longer be able to tell the amps apart because his brain wouldn’t know what to expect. Conversely, you could set up a sighted test where Lieven believes he’s comparing the O2 to a more favored amp but in reality he’s always listening to the O2. Because he’s expecting to hear differences, he would hear them, even though he’s really listening to the same amp. He would hear what he expects from each amp. This isn’t unique to Lieven or Headfonia. We all suffer from this bias.

MEASUREMENTS PROVIDE REAL ANSWERS: As its measurements demonstrate, the O2 performs very similarly with just about any headphone load and is audibly transparent with the HD650. There’s nothing about the HD650 that alters the signal delivered by the O2 in an audible way. It would be useful to measure the other amplifiers used for comparison in the review. It’s possible one or more are less accurate than the O2, which might create an audible difference. See the Tech Section for more.
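
One way to see why a low output impedance makes an amp behave the same with almost any headphone is a simple voltage-divider calculation. The Python below is a sketch under stated assumptions, not the measurement method used for the O2: the 300 to 500 ohm impedance swing and the three amp output impedances are hypothetical illustrative values, not measured figures for the HD650, the O2, or any amp in the review.

```python
import math


def level_db(z_out: float, z_load: float) -> float:
    """Level at the headphone terminals relative to the amp's unloaded
    output voltage, in dB, modeling amp and headphone as a voltage divider."""
    return 20.0 * math.log10(z_load / (z_load + z_out))


def response_deviation_db(z_out: float, z_min: float, z_max: float) -> float:
    """Change in delivered level (dB) as the headphone impedance swings
    between z_min and z_max at different frequencies."""
    return abs(level_db(z_out, z_max) - level_db(z_out, z_min))


# Assumed, illustrative headphone impedance swing across the audio band
# (hypothetical values, not a measurement of any specific model).
Z_MIN_OHMS, Z_MAX_OHMS = 300.0, 500.0

# Assumed amp output impedances: very low, moderate, and high.
for z_out in (0.5, 10.0, 120.0):
    dev = response_deviation_db(z_out, Z_MIN_OHMS, Z_MAX_OHMS)
    print(f"Zout = {z_out:5.1f} ohm -> response deviation {dev:.3f} dB")
```

With these assumed numbers the 0.5 ohm case changes the level by less than a hundredth of a dB across the impedance swing, while the 120 ohm case shifts it by roughly 1 dB. That is one measurable way a high output impedance amp can genuinely sound different with some headphones.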

A BETTER METHOD: Instead of pretending we’re somehow exempt from inevitable filtering and expectation bias, a much better approach is to simply remove the expectations. The odds are extremely high that, had the authors used level-matched blind testing, they would have reached very different conclusions. This can even be done solo with an ABX switching setup that allows instant switching, staying within the critical 0.2 second window. Trials can be run in quick succession, hours apart, or spread over weeks. You can listen to 10 seconds of a revealing excerpt or 10 entire CDs. In the end, the ABX setup reliably indicates whether there’s an audible difference. If a difference is detected, an assistant can help with a blind audition of both to determine which is preferred.
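
The statistics behind an ABX session are simple: if a listener can’t hear a difference, each trial is effectively a coin flip. The Python sketch below scores a session with a one-sided binomial test and includes a hypothetical simulated listener. The 16-trial session length and the 0.05 threshold are assumptions for illustration, not the procedure of any particular ABX tool.

```python
import random
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """Chance of scoring at least `correct` out of `trials` by pure guessing
    (one-sided binomial test with p = 0.5 per trial)."""
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials


def simulate_abx(trials: int, hears_difference: bool, seed: int = 1) -> int:
    """Hypothetical ABX session: X is secretly A or B on every trial.
    A listener who truly hears a difference is modeled as always right;
    one who cannot is modeled as guessing."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        x = rng.choice("AB")                  # hidden identity of X
        answer = x if hears_difference else rng.choice("AB")
        correct += answer == x
    return correct


if __name__ == "__main__":
    TRIALS = 16      # assumed session length
    ALPHA = 0.05     # assumed significance threshold
    for label, hears in (("guessing", False), ("real difference", True)):
        score = simulate_abx(TRIALS, hears)
        p = abx_p_value(score, TRIALS)
        verdict = "difference detected" if p < ALPHA else "no reliable difference"
        print(f"{label:>15}: {score}/{TRIALS} correct, p = {p:.4f} -> {verdict}")
```

For example, 12 of 16 correct has about a 3.8% chance of happening by luck alone, while 8 of 16 is exactly what guessing predicts.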

SUBJECTIVE REALITY: I’ve done the above blind testing using my own HD650 headphones and Benchmark DAC1 Pre. The O2 sounded so similar to the $1600 Benchmark that neither I nor another listener could detect any difference. Simple logic tells us that if what Lieven wrote about the O2/HD650 is true, it would also likely hold true for the Benchmark DAC1 Pre/HD650, or indeed any sufficiently transparent headphone amp. Considering the DAC1 is a Stereophile Class A (their highest rating) headphone component and has been rave reviewed by numerous other high-end audiophile reviewers, there seems to be a serious disconnect somewhere. At least one of the reviewers must be wrong, and the Headfonia authors are far outnumbered.

A REVIEWER’S DIFFICULT CHOICE: Subjective reviewers, like Lieven, have three basic choices in how they want to view a piece of gear like the O2 that measures very well: (photo by: striatic CC)

- Claim, wrongly, that some transparent gear sounds different from other transparent gear, and make up weak excuses to avoid the blind testing that would show what differences they can really hear.

- Claim non-transparent (i.e. “colored”, lower fidelity) gear with added distortion is somehow better than transparent gear. This would condemn all transparent gear, even some really expensive gear, as being just as “inferior” as the O2.

- Concede all audibly transparent gear is accurate and will generally sound the same. They don’t have to like the sound of transparent gear, but they would accept that giving the O2 a negative review means also giving a Violectric, DAC1, and many other high quality headphone amps an equally negative review.

WHEN THERE REALLY ARE DIFFERENCES: Sometimes gear really does sound different for some good reasons. I provide examples in the Tech Section. The Headfonia review, and related comments, have argued lower fidelity “colored” amps are somehow better than accurate high fidelity amps like the O2. This is often called “synergy” or “euphonic distortion” and it’s a matter of personal taste but it does have some very significant downsides. (photo by: tico cc)

TWO WRONGS DON’T… The synergy theory goes something like: “Because headphones are imperfect it’s OK to pair them with imperfect amps that help compensate for the headphone’s flaws.” Someone might pair bright headphones with an amp having poor high frequency response to help tame the nasty highs. But what happens when you upgrade to say the HD650 with relatively smooth natural highs? Now your headphone amp is going to sound dull and lifeless and there’s no way to fix it. The generally accepted goal, for many solid reasons, is the entire audio reproduction signal chain should be as accurate and transparent as possible right up to the headphones or speakers. That’s what recording engineers who mix our music, and manufacturers of our headphones and speakers, are counting on. If you want different sound see the next paragraph.

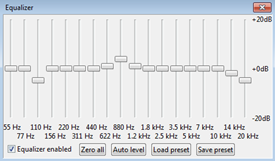

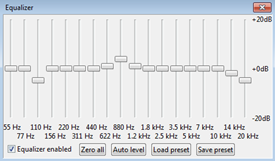

AMPS & DACS MAKE LOUSY EQUALIZERS: If you want to alter the sound, EQ is a much better tool than a colored amp or DAC. That’s what it’s for. There are even decent EQ apps for iOS and Android. For those purists who somehow think DSP or EQ is wrong, odds are your favorite music has already had significant DSP and/or EQ applied. There’s an example in the Tech Section showing even two passes of digital EQ are entirely transparent. Using a headphone amp to compensate for a flaw in your headphones is like buying a music player that can only play one song.
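
For readers curious what a software EQ band actually is, here is a minimal Python sketch of one parametric band using the peaking-filter formulas from Robert Bristow-Johnson’s widely used Audio EQ Cookbook. It is not the EQ from the Tech Section example, and the sample rate, center frequency, gain and Q below are arbitrary illustrative assumptions. A real EQ app would also handle multiple bands, stereo and clipping headroom.

```python
import cmath
import math


def peaking_eq(fs: float, f0: float, gain_db: float, q: float):
    """Biquad coefficients for one peaking EQ band (RBJ Audio EQ Cookbook).
    Returns (b, a) normalized so that a[0] == 1."""
    big_a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    b = [1 + alpha * big_a, -2 * cos_w0, 1 - alpha * big_a]
    a = [1 + alpha / big_a, -2 * cos_w0, 1 - alpha / big_a]
    return [c / a[0] for c in b], [c / a[0] for c in a]


def apply_biquad(samples, b, a):
    """Filter a sequence of samples with the biquad (Direct Form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def gain_db_at(b, a, fs: float, f: float) -> float:
    """Magnitude response of the biquad at frequency f, in dB."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20 * math.log10(abs(num / den))


if __name__ == "__main__":
    FS = 48_000  # assumed sample rate
    # Hypothetical example: tame a bright treble peak with a 4 dB cut at 6 kHz.
    b, a = peaking_eq(FS, f0=6_000, gain_db=-4.0, q=1.5)
    for f in (100, 1_000, 6_000, 15_000):
        print(f"{f:>6} Hz: {gain_db_at(b, a, FS, f):+.2f} dB")
    impulse = [1.0] + [0.0] * 7
    print([round(v, 4) for v in apply_biquad(impulse, b, a)])
```

Unlike a colored amp, the cut can be retuned for new headphones, or set to 0 dB, where the band passes the signal through unchanged.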

NOT SO EUPHONIC: Amps or DACs that contribute what’s often called “euphonic distortion” are a lot like using them as equalizers above. An amp with “euphonic distortion” might help mask other problems by adding audible crud to your music but that’s putting a band aid on the problem instead of fixing what’s really wrong. You’re stuck with the added crud from euphonic gear no matter what. Even with the most pristine audiophile recording with low distortion headphones, your gear is still going to dumb down your music with its ever present contributions. If you’re really craving say the sound of a vintage tube amp, it can be done rather convincingly in software via DSP. That way you can at least turn the crud on and off as desired.
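
As a crude illustration of turning “crud” on and off in software, the Python sketch below applies an asymmetric soft-clipping waveshaper. This is not a model of any real tube amp or of how convincing tube-emulation plug-ins work internally, and the drive and bias values are arbitrary assumptions. The point is the `enabled` flag: the coloration becomes a choice you can bypass rather than a permanent part of the signal chain.

```python
import math


def tube_color(samples, drive: float = 2.0, bias: float = 0.3, enabled: bool = True):
    """Crude, hypothetical 'tube-style' coloration: an asymmetric tanh
    waveshaper that adds harmonics, including the even-order ones often
    associated with tube gear. With enabled=False samples pass untouched."""
    if not enabled:
        return list(samples)
    rest = math.tanh(bias)                  # shaper output at zero input
    slope = drive * (1 - rest * rest)       # small-signal gain at zero input
    # Subtract the resting value so silence stays silent, and divide by the
    # slope so quiet passages keep roughly unity gain.
    return [(math.tanh(drive * x + bias) - rest) / slope for x in samples]


if __name__ == "__main__":
    tone = [0.8 * math.sin(2 * math.pi * n / 48) for n in range(48)]
    colored = tube_color(tone)
    clean = tube_color(tone, enabled=False)
    # Unequal positive and negative peaks show the asymmetry.
    print(max(colored), -min(colored))
    print(clean == tone)
```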

IT’S A PACKAGE DEAL: Sadly most “colored” and “euphonic” audiophile gear also has other problems. To get that soft high end you’re after you might end up with a tube amp and also get a very audible 1% THD. Or, like some Schiit Audio products have done, your “esoteric” amp may destroy your expensive headphones. Gear with lower fidelity in one area is much more likely to have problems in other areas.
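
For a sense of what “1% THD” means numerically: THD is the RMS sum of the harmonic amplitudes divided by the fundamental, so 1% corresponds to harmonics totaling 20·log10(0.01) = −40 dB relative to the tone. The Python sketch below measures it on a synthetic test tone whose two harmonic amplitudes were chosen (as an assumption, for illustration) to combine to exactly 1%. Real analyzers add windowing, noise handling and more careful bin selection.

```python
import math


def tone_amplitude(samples, fs: float, freq: float) -> float:
    """Amplitude of one sinusoidal component via a single-bin DFT.
    Assumes the buffer holds a whole number of cycles of `freq`."""
    n = len(samples)
    w = 2 * math.pi * freq / fs
    re = sum(s * math.cos(w * i) for i, s in enumerate(samples))
    im = sum(s * math.sin(w * i) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


def thd_ratio(samples, fs: float, f0: float, n_harmonics: int = 9) -> float:
    """THD: RMS sum of harmonics 2..n_harmonics+1 divided by the fundamental."""
    fundamental = tone_amplitude(samples, fs, f0)
    harmonics = [tone_amplitude(samples, fs, k * f0) for k in range(2, n_harmonics + 2)]
    return math.sqrt(sum(h * h for h in harmonics)) / fundamental


if __name__ == "__main__":
    FS, F0, N = 48_000, 1_000, 4_800     # assumed: 100 whole cycles of 1 kHz
    # Hypothetical test tone: 2nd and 3rd harmonics at 0.8% and 0.6%
    # combine to sqrt(0.008**2 + 0.006**2) = 1% THD.
    tone = [math.sin(2 * math.pi * F0 * i / FS)
            + 0.008 * math.sin(2 * math.pi * 2 * F0 * i / FS)
            + 0.006 * math.sin(2 * math.pi * 3 * F0 * i / FS)
            for i in range(N)]
    r = thd_ratio(tone, FS, F0)
    print(f"THD = {100 * r:.2f}% ({20 * math.log10(r):.1f} dB)")
```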

FIDELITY DEFINED: The “Fi” in “Head-Fi” is supposed to be fidelity, as in “high fidelity”. If you look up the words, you’ll find it means “being faithful”, “accuracy in details” and “the degree to which an electronic device accurately reproduces sound”. Note the words “accuracy” and “electronic”. Accuracy is something that can be measured in most electronic audio gear (DACs, amps, etc.). So if “high fidelity” is really the goal why would anyone be opposed to measurements that support that goal? Ask yourself that question next time you encounter someone dismissing or downplaying audio measurements.

MAGIC: Some compare the supposedly better sound heard from high-end gear to “magic”. Lieven quotes Nelson Pass claiming “It is nevertheless possible to have a product that measures well but doesn’t sound so good. It is still a mystery as to how this could be.” In effect Pass appears to be saying amplifier sound is mystical. But it’s not. Lots of credible published research demonstrates measurements predict sound quality. If Pass is so confident measurements don’t tell the whole story, where’s his published blind listening test demonstrating his view? Such a result would certainly help sell more of his five figure products so he has a strong incentive to publish such a test. Still, magic is an interesting analogy. Magicians know how to exploit weaknesses in our senses to deceive us. Convincing someone a $2000 power cord really sounds better is a similar act of sensory deception. (photo by: Cayusa CC)

BUT IT SOUNDED BETTER EVEN IN THE NEXT ROOM! Anyone who’s read many of the blind vs. sighted debates has probably come across someone claiming their wife commented on how much better it sounded while she was three rooms away in the kitchen. That phenomenon was explained by Stuart Yaniger in his recent article Testing One Two Three. It turns out many audiophiles have a few favorite test tracks they cue up after making changes to their system. The test tracks are an obvious clue to their spouse that they probably changed something. And, spouses being spouses, they sometimes respond with the audio equivalent of “that’s nice dear”. Sit down and do a blind comparison if you really want to know the truth, preferably in the same room as the speakers.

IF THIS IS ALL TRUE WHY DON’T MORE PEOPLE KNOW OR TALK ABOUT IT? The biggest single reason is there’s an enormous amount of money behind high-end audio. It’s largely built on the belief that spending significantly more money on high-end gear yields better sound. Pick up nearly any magazine, or visit nearly any website, and you’ll read how a $3000 DAC sounds better than a $300 DAC. But is that really true, or is it just a self-serving perception that exploits the weaknesses in our auditory abilities? The industry has carefully cultivated and crafted a lot of perceptions over the last few decades, and some of it has been organic, especially given how audio forums work.

SOME IMPORTANT HISTORY: High-end audio was nearly non-existent in the 1970s short of a few companies like McIntosh and even those marketed their gear around objective criteria. If anyone tried to sell a $2000 power cord in 1980 they would have been laughed out of business but now there are dozens of companies selling them. The wine industry has followed a very similar path. In 1980 people tended to buy wine they thought tasted good. But, courtesy of decades of clever marketing, wine has been made out to be much more complex and important. An entire industry in the USA has grown up around wineries, wine tourism, wine bars, tastings, pairings, flights, expert reviews, etc. Consumers have been taught to doubt their own ability to judge wine and instead trust expert wine ratings with their point scores and elaborate tasting notes. And, not surprisingly, the experts tend to rate more expensive wines much higher. It’s scary how similar it is to high-end audio. Compare “the Warped Vine Cabernet was leathery with hints of green pollen” to “the UberDAC had a congested sense of pace and tarnished rhythms”. Check out the books by Robin Goldstein, Mike Veseth, Jonathan Nossiter, and the documentary Mondovino.

CONDESCENDING LINGO: Like the wine industry, the audio industry has invented its own complex secret language. The books referenced above expose this as a clever marketing technique. The vague, complex language makes the average person feel they’re not qualified to know what to buy and should defer to the “experts” (who in turn promote more expensive products). So next time you read about slam, pace, congestion, rhythm, blackness, sheen, timing, or other words that could have many meanings, consider it marketing mumbo jumbo and think twice about trusting anything else that person has to say. Misappropriating words to mean something ill-defined and vague isn’t doing anyone any favors except those hawking overpriced gear.

CREATING DOUBT: Someone might have a favorite inexpensive wine but the wine industry often succeeds in making them wonder if it’s “good enough” to serve to guests. They’re tempted to ignore their own perceptions and instead buy something that’s well rated and more expensive. This is good for the wine industry but bad for consumers. (photo by: Mister Kha CC)

AUDIO DOUBT: The high-end audio industry mirrors the wine industry. Websites like Head-Fi create massive amounts of doubt among those who spend much time there. I’ve received hundreds of messages like:

“I have the $200 UltraCan Five headphones and I thought they sounded really good with my iPod. But then I found Head-Fi and read the new $500 UberCan Golds are a lot better but they apparently need at least the $500 UberAmp Titanium to really perform. Should I spend $1000 to upgrade?”

SAD STORIES: I read messages like the one above and it makes me sad. Here’s this college student, probably eating macaroni and cheese for dinner, contemplating spending $1000 more than they’ve already spent to listen to music on their $200 iPod? And this is from a person who, until they found Head-Fi, thought they already had really good sound! In my opinion it’s just wrong—especially when the doubt is largely based on flawed listening perceptions and often hidden commercial influences.

BEHIND THE SCENES AT HEAD-FI: The above is the marketing genius of sites like Head-Fi and Wine Spectator. This all plays into the Head-Fi recipe:

- Censorship – Head-Fi bans discussion of blind testing on 98% of the site and they have censored or banned members who speak out against Head-Fi or their sponsors. They take many actions to heavily tilt perceptions in favor of visitors spending money they probably don’t need to spend.

- Questionable Gear - Some of the most hyped gear on Head-Fi, in my opinion, is snake oil—Audio-GD, NuForce and Schiit Audio are three examples arguably based on hype rather than solid engineering. Some of it is sold through very limited sales channels and Head-Fi is probably responsible for the majority of the sales.

- Massive Advertising - Head-Fi is a banner farm of advertising that includes $1000 iPod cables. The advertisers want to capitalize on the doubt created among Head-Fi visitors, with the moderators and admins acting on their behalf.

- Infomercials – Jude, and others at Head-Fi, produce what many consider “infomercials” promoting products for Head-Fi sponsors. When Wine Spectator granted the Award of Excellence to Robin Goldstein’s fake restaurant they suggested he also buy an ad on their website. Those sorts of cozy behind the scenes relationships are present in the audio industry as well. See Stereophile Exposed below.

- Biased Evangelists – A disproportionate number of Head-Fi posts are made by what some consider shills who “evangelize” and fiercely defend products sold by Head-Fi sponsors. Some might be just rabid fanboys, but if you look at the products that receive the most positive, and greatest number of, comments they’re usually from Head-Fi sponsors and are often somewhat questionable products that probably wouldn’t do nearly as well left to their own (lack of) merits.

- Deception – To the casual visitor, Head-Fi just seems to be an open forum where average people can talk about headphone stuff. But it’s really owned by a commercial entity that’s using it as a marketing machine. And it’s not “open”—see Censorship above. It’s a private forum run for profit. From what I understand the moderators and admins receive a steady stream of free gear while Jude receives all that plus serious income. It’s no longer just a hobby to these guys.

SAFE MYTH BUSTING: There’s a great show on the Discovery Network called MythBusters. They pick a few myths for each episode and set out to see if they’re true. To my knowledge they nearly always avoid busting myths that might cause serious financial harm to anyone and instead focus on things like toilets exploding when used by cigarette smokers. This same “courtesy” is almost universally extended by the media covering high-end audio. The past has shown crossing the line is dangerous.

THE AUDIO CRITIC: Peter Aczel set out many years ago to expose myths in audio with his magazine and website The Audio Critic. He had a relatively small but significant and loyal following. But, progressively and sadly, he was marginalized in a variety of ways. Today nearly all audio journalists are inexorably in bed with the manufacturers in one way or another. And if you’re openly pro-Obama you don’t get to work on Mitt Romney’s political campaign. (photo: The Audio Critic)

STEREOPHILE QUESTIONED: For those who think I have an axe to grind, it’s nothing compared to Arthur Salvatore’s axe. He strongly questions the credibility of John Atkinson and Michael Fremer at Stereophile. Whatever his personal bias, he presents what appears to be factual data implying Stereophile has heavily favored advertisers and selling magazines over being objective audio journalists. Sadly, as with Wine Spectator’s Excellence Awards, I believe this is likely to happen whenever you can follow the money from their paychecks up the chain to the manufacturers of the products they’re supposed to be reviewing.

DECEPTION RUNS DEEP: I used to work in high-end audio. I can attest many believe the “party line” as they’re more or less immersed in it. It’s much easier to dismiss the rare blind listening test than consider their own industry is largely based on erroneous myths. Believing the earth is flat allows them to sleep better at night. So keep in mind even those selling $2000 power cords might genuinely believe their product sounds better. They likely listened with the same expectation bias described above and hence are believers. But that doesn’t mean lots of expensive gear really does sound better.

THE GOLD STANDARD: Across many branches of science, blind testing is the gold standard for validating whether things work in the real world. It’s well accepted in medicine and forensics, where human lives are at stake, and in physics. So it’s stunning when audiophiles claim it’s an invalid technique, despite mountains of evidence to the contrary dating back to the 1800s. The Tech Section addresses all the common complaints about blind testing. But the short version is there are no practical objections when you do it right.

BLIND AVOIDANCE: Perhaps the most interesting disconnect in the industry is the almost universal avoidance of blind testing. If someone really believes their expensive gear sounds better, why not try a blind comparison? This is where things get fuzzy about what many in the industry really believe. I would think many would be genuinely confident and/or curious enough to at least try some blind testing. But, almost universally, they refuse to participate in blind tests and often actively attack blind testing in general. Why? Deep down, is it because they’re worried the expensive gear, cables, etc. really don’t sound better? Did they secretly try a blind test and fail to hear a difference? Or have others convinced them blind testing is a total sham and the earth is really flat?

BLIND DONE WRONG: When blind testing is used by someone in the industry they arguably misuse it and retain the very expectation bias blind testing is supposed to remove. Some examples of “blind abuse” are in the Tech Section.

FANTASIES: Another way to explain the avoidance of factual information is some simply enjoy the fantasy. High-end audio is a serious hobby for many. For some constantly swapping out gear is a big part of the fun. Blind testing could, obviously, jeopardize some of their enjoyment. One person’s hobby is their own. But if I imagine wine tastes better when consumed from vintage Waterford crystal should I really be trying to convince others to get rid of their pedestrian wine glasses and spend a lot more on vintage crystal because the wine tastes better? This is exactly what most audiophiles are doing at sites like Head-Fi, Headfonia, and many others. They’re convincing others to spend more money with the promise of better sound when they’re often not getting better sound.

FANTASIES ARE NOT ALWAYS HARMLESS: Some have told me I’m pulling the beard off Santa Claus and I should leave deluded audiophiles to their harmless fantasies. But the fantasies are not harmless when, collectively, the often repeated myths are costing people tens and probably hundreds of millions of dollars. Not everyone buying high-end gear has money to burn and reads the Robb Report. Many just want better sound. They don’t need $1000 DACs, power conditioners, expensive cables, etc. to get the sound they’re after yet they’re told over and over they do need those things.

WATERFORD CRYSTAL: I think most would agree the difference between regular glass and crystal doesn’t change the actual taste of wine but it’s fair to say it might change the overall experience. There’s nothing wrong with buying and using expensive crystal wine glasses. But I believe it’s crossing an important line when you try to convince others the crystal itself makes the wine actually taste better. (photo by: foolfillment CC)

MONEY WELL SPENT: I’m not suggesting there’s no reason to buy expensive audio gear. I own a $1600 DAC. There can be satisfying reasons to spend more, including higher build quality, more peace of mind, the heritage and reputation behind certain manufacturers, better aesthetics, and other less tangible benefits. But, for the sake of those interested in getting the best sound for their money, it’s best not to confuse sound quality with those other benefits. Just as drinking from crystal doesn’t improve the taste of the wine itself, buying a preamp with a massive front panel and a $2000 power cord doesn’t improve the sound, even if it might improve the experience for some. I’ll be covering this in much more detail in my upcoming Timex vs Rolex article.

ONE MAN’S BOOM IS ANOTHER MAN’S BASS: Perhaps the most accurate headphones I own are my Etymotic ER4s, but they’re also among the least used. Why? They’re just not very exciting to my ears and are ruthlessly revealing of poor recordings. I want to be clear that nobody can dictate what’s best for someone else when it comes to headphones, speakers, phono cartridges, and signal processing (including EQ). All of these things alter the sound to varying degrees, so it comes down to individual preferences as to which is best. That said, I’ve also presented good reasons why it’s worthwhile for everything else in the signal chain to be as accurate and transparent as possible, that is, to have the highest fidelity. Just because I like, say, a bit of bass boost doesn’t mean I should go buy a headphone amp with a high output impedance that makes my HD650s sound more boomy. That same amp would be a disaster with my Denon D2000s, Etymotics or Ultimate Ears. It’s much better to choose a headphone with more bass, like my DT770, or use some EQ to punch up the bass to my liking. Then I can use my headphone amp with any headphone and I can have my cake and eat it too.

WHERE’S THE LINE? I can understand wanting to own high quality equipment. But where do you draw the line between subjective enjoyment and blatant snake oil and fraud? Is a $3000 “Tranquility Base” with “Enigma Tuning Bullets” from Synergistic Research more like Waterford crystal or like a magnet you clamp on the fuel line of your car that claims to double your gas mileage? What about Brilliant Pebbles, a $129 (large size) jar of rocks that’s supposed to improve the sound of your audio system? These companies are examples presented in the myths video (Tech Section). Should such products be able to stand up to blind testing? Does it matter if the claimed benefits are real? To me it’s a slippery slope once you start agreeing with the “blind tests don’t work” crowd, as the possibilities for unverifiable claims are endless.

TRUTH IN ADVERTISING: Many countries have laws against false claims, and they’re sometimes enforced via legal action. For example, companies claiming unproven health benefits for products answer to the FDA. And Apple is currently being sued for misrepresenting Siri’s capabilities in its TV ads. Should audio companies be held to similar standards, or at least be required to use a disclaimer when claims are unproven? When do claims enticing people to spend lots of money become fraudulent?

THINGS TO CONSIDER: If this article has you wondering just what’s real and what isn’t I would suggest considering some of the following:

- Focus On What Matters To You – If you only want the best sound for your money don’t let others make you doubt your own enjoyment or convince you to spend more than necessary for things you can’t hear or don’t need. Likewise if you want a headphone amp that you can hand down to your kids in 20 years don’t let someone like me keep you from buying a Violectric V200 instead of an O2 even if they sound the same. The Violectric is better made. Of course your kids may have audio brain implants in 20 years.

- Your Tastes Are Your Own – Subjective reviews are just that: Subjective. Don’t assume you will hear what a reviewer claims to have heard. We all hear differently, have different preferences, etc.

- Music is Art but Audio is Science – Don’t confuse the art of being a musician with the science of reproducing recorded music. What an amplifier looks like is art, but what it does with an audio signal is entirely explained by science.

- Use Common Sense – Be aware the controversy over blind testing is almost like a religious war. But try to step back and use common sense: consider the source, references, credible studies, etc. Follow the links in this article to additional resources. Weigh the huge body of scientific evidence in favor of blind testing and measurements against subjective claims backed by little or no objective evidence of any kind. If differences between cables, power cords, preamps, etc. were really so obvious, don’t you think someone would have set up a credible blind test demonstrating the benefits by now?

- Consider The Source – When you’re reading about gear, testing, reviews, etc., always consider the source. What gear does the author own? Who do they work for? Where does their (and/or their employer’s) income mostly come from? Are they selling audio gear? Do they receive free gear? Do they otherwise have a vested interest in promoting the status quo? I’m not saying everyone is hopelessly biased by those things, but they often contribute bias to varying degrees (see the Stereophile discussion above and the wine references).

- Try Free ABX Software – This will allow you to conduct your own solo blind tests comparing, say, a CD quality 16/44.1 track with a 24/96 track. See the Tech Section for more details.

- Enlist A Friend (with a good poker face) – If you’re convinced that new gear sounds better, find someone else to randomly connect either the new gear or the old gear so you don’t know which you’re listening to. Keep everything else as consistent as possible, including the volume, music used, etc. See if you can identify the new gear. If you think you can, repeat the experiment several times, keeping score of your guesses while your friend keeps score of which one was really connected. Compare notes when you’re done.

- Eliminate Other Variables & Clues – If you’re trying to compare two pieces of gear, do everything you can to eliminate anything that might cause expectation bias or provide even subtle clues as to which is which. Let’s say someone is plugging your headphones, behind your back, randomly into source A or source B. You need to make sure the sound the plug makes going into the jack isn’t different enough to be recognizable. Also make sure the music is the same and not time shifted, and that one player doesn’t click when starting or have audible hiss the other lacks. Make sure the person helping either has a poker face or stays out of sight and doesn’t say anything, as they may otherwise provide clues. And if you suspect someone else is hearing differences that likely don’t exist, look for these kinds of clues that might be tipping them off, even subconsciously.
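
For the friend-assisted test above, deciding the order in advance with a random schedule keeps the helper from unconsciously falling into patterns. Here’s a minimal Python sketch; make_schedule() and score() are hypothetical helpers, and “A” and “B” simply stand for the old and new gear:

```python
import random

def make_schedule(n_trials, seed=None):
    """Hidden list of which gear is connected on each trial.

    'A' (old gear) and 'B' (new gear) are hypothetical labels. Each trial is an
    independent coin flip, so the listener can't work out later trials by
    counting how many A's and B's have already come up.
    """
    rng = random.Random(seed)
    return [rng.choice("AB") for _ in range(n_trials)]

def score(schedule, guesses):
    """Number of trials where the listener's guess matched what was connected."""
    if len(schedule) != len(guesses):
        raise ValueError("need exactly one guess per trial")
    return sum(actual == guess for actual, guess in zip(schedule, guesses))
```

The friend runs make_schedule(12), keeps the list out of sight, and connects the gear in that order while you write down a guess for each trial. score(hidden, guesses) then gives the number of correct identifications, which can be checked against the statistical guidelines discussed in the Tech Section.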

OTHER RESOURCES AND REFERENCES: In addition to all the links sprinkled around this article, check these out for more information:

- Subjective vs Objective Debate – My first attempt at tackling some of this last spring

- Music vs Sine Waves – An article I wrote debunking some myths about audio measurements.

- Headphone Amp Measurements – An article I wrote for InnerFidelity on amp measurements

- 24/192 Music Downloads – High resolution 24 bit music has to sound better, right? Or does it?

- Matrix Blind Audio Test – A relatively simple and revealing blind test that’s easy to understand

- Audio Myths Workshop Video – This is a somewhat technical presentation but it has lots of simple common sense examples. See the Tech Section for more details and how to obtain the high quality example files used in the second half to listen for yourself.

- Why We Hear What We Hear – A technical article on the human auditory system by James Johnston that goes into more detail about what we hear.

- Dishonesty of Sighted Listening – An excellent technical paper by Sean Olive on evaluating speakers with blind vs sighted listening.

- Hydrogenaudio – A geekfest of mostly objective audio folks. Lots of blind tests, analysis, and more.

- Audio Differencing – Consider trying out the free software from Liberty Instruments and their test files. Do capacitors really change the sound?

- Science & Subjectivism in Audio – An information-packed page by Doug Self covering this topic.

- Meyer & Moran Study – In 600+ trials, even audiophiles couldn’t hear a difference between CD and SACD high resolution audio.

THE FUTURE: Look for my “Timex vs Rolex” article where I’ll cover what you get as you spend more, what to look for based on your priorities, etc. I’ll also be comparing some typical gear at widely different price points and exposing some high-end gear with low-end performance.

BOTTOM LINE: We can’t “trust our ears” the way we’re usually led to believe we can. And we can’t trust the ears of reviewers for the same unavoidable reasons. I’m not pretending I can write a few articles and change the minds of thousands of audiophiles—others before me have tried. But even if I only help a few save some serious money by focusing on what’s real, I’ve succeeded. Next time you encounter someone you think might be wrongly arguing for spending more money in the interest of better sound, consider providing a link to this article. If they read it and decide the earth is really still flat, or they want to spend more anyway, it’s their choice. But at least the information helps anyone who’s interested make a more informed decision.

TECH SECTION

INTRO: Below are further details on some of the references I used for this article and some added information for the more technically inclined.

BLIND OBJECTIONS: There are several typical objections to blind testing. But nearly every one has been well addressed. They include:

- Switchgear Masking – Some try to argue the relays or switch contacts, extra cabling/connectors, etc. associated with some blind testing somehow mask the differences between gear. But there have been many blind tests without any extra cables or gear. See the Matrix Audio Test as one example where humans simply swapped cables. What’s more, the music we listen to has already been “masked” by all the unseen cabling and switchgear used in producing it. A lot of high end and well respected gear is full of switches and relays. Objection removed.

- Listening Stress – Some blind tests are administered by others, and listeners may feel some pressure to “perform”. But an ABX box can be used solo, in the listener’s own home, under exactly the same conditions they normally listen. Objection removed.

- Listening Duration – It’s often said you can’t appreciate the differences between gear by listening to just one track or for just a short period of time before switching. An ABX box can be used at home for whatever duration desired. You can listen to Gear A for a week, then Gear B for a week, and finally Gear X for a week before casting your vote. That could go on for as long as you want. Objection removed.

- Unfamiliar Environment – Blind tests are often criticized for being conducted in an unfamiliar environment. Never mind that audiophiles can pop into a booth or room at a noisy trade show and claim to write valid reviews of how the gear sounded. It’s entirely possible to do blind testing in your own home, with your own gear, your own music, at your own leisure, etc. See above. The Wired Wisdom blind test, for example, was done in the audiophiles’ homes. Objection removed.

- The Test System Wasn’t High Enough Resolution – See above. Blind tests have been done with audiophiles’ personal $50,000 systems and the results are consistently the same. Objection removed.

- Statistically Invalid – There have been some tests with marginal results that leave room for the glass half empty or glass half full approach as to their statistical significance. But there have been many more that have had a valid statistical outcome over many trials. The math is clear and well proven. Objection removed (for well run tests with clear outcomes).

- Listening Skill – Some claim blind tests fail because the listeners involved were not sufficiently skilled. But it’s well established the filtering and expectation bias of our hearing is involuntary—no amount of skill can overcome it. It even trips up recording engineers. Ultimately, if say a dozen people cannot detect a difference, what are the odds you can? Objection largely removed.

- Flawed Method – It’s always possible for someone to find flaws with the method. It’s like a candidate losing a political election, demanding a ballot recount, and finding a dead person cast one vote, never mind they need another 7032 votes to win. They want to invalidate the election on an insignificant technicality for entirely biased reasons. The same audiophile who claims to listen to a piece of gear for 5 minutes at a trade show and have a valid opinion about how it sounds will somehow claim noise on the AC power line masked all differences in a blind test. Really?

- It’s Not Real Proof – This is the ultimate weapon for the blind critics. Anyone can always claim something like “it’s possible when rolling dice to get double sixes many times in a row so your test doesn’t prove anything.” And, technically, they’re correct. But, in practice, the world doesn’t work that way. If they really believe in beating impossible statistical odds, they should be spending their time in a Las Vegas casino, not writing about blind testing. Drug manufacturers are not allowed to tell the FDA it’s possible their drug is really better than the placebo when the statistical outcome of their carefully run trials indicates otherwise. There are well established standards that are accurate enough to use where human life is at stake, but some audiophiles still, amazingly, try to claim these methods are somehow invalid.

WHEN BLIND IS FLAWED: Some in the industry, and the odd individual, have arguably misappropriated blind listening. For example, the UK magazine Hi-Fi Choice conducts what they call “blind listening group tests”. But instead of following proven and established guidelines for blind testing to determine if the listeners can even tell two pieces of gear apart, they seem to let their judges listen to gear as they choose over multiple listening sessions and take notes on their impressions, presumably without knowing which gear is playing. This method suffers from much of the same filtering and expectation bias as sighted listening: they’re expecting differences, so they hear differences. As far as I can tell (the published details are vague), they’re not directly comparing two pieces of gear looking for statistically significant differences over several controlled trials. There doesn’t seem to be any voting or scoring to determine which differences are real versus simply a result of expectation bias. Studies suggest one could blow their entire method out of the water by simply running their usual test with, say, 5 different amplifiers but, instead of playing all 5, repeating one of them twice and leaving one out. I’ll bet you serious money they would hear all sorts of differences between the two sessions with the same amplifier playing. This has been done in blind wine tasting, and some of the greatest perceived differences were between identical wines poured from the same bottle (check out the wine tasting podcast interview).
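
The hidden-duplicate control suggested above is easy to set up. Here’s a minimal Python sketch, assuming the organizer uses hypothetical amp names (each one unique) and keeps the returned key sealed until the judges’ notes are collected:

```python
import random

def plan_sessions(amps, seed=None):
    """Blind session order that secretly repeats one amp and drops another.

    Returns (order, repeated, dropped). Judges only see session numbers;
    `repeated` and `dropped` stay sealed until all listening notes are in.
    Assumes every amp name in `amps` is unique.
    """
    if len(amps) < 2:
        raise ValueError("need at least two amplifiers")
    rng = random.Random(seed)
    repeated, dropped = rng.sample(amps, 2)      # two distinct amps
    order = [a for a in amps if a != dropped]    # leave one amp out...
    order.append(repeated)                       # ...and play another twice
    rng.shuffle(order)                           # so position gives nothing away
    return order, repeated, dropped
```

If the judges’ notes describe clear differences between the two sessions that were actually the same amplifier, that’s a strong sign the method is measuring expectation rather than the gear.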

UNLISTENABLE: This guy claims to have done a blind test with 5 people and 10 trials each between two pieces of gear. He further claims “Every listener described the Halcro as being 'unlistenable'”. I’m sorry but if something is so bad as to be “unlistenable” do you really think you need a blind test to figure that out? That’s like comparing a good Cabernet to drinking vinegar. Also, for what it’s worth, he doesn’t seem to understand the difference between THD and THD+N where the “N” stands for noise. What he’s really measuring isn’t distortion, as he implies, but the noise floor. I encourage everyone to apply common sense to what they read and consider the source.

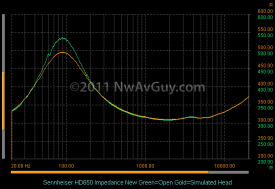

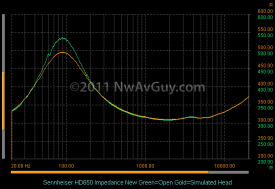

OUTPUT IMPEDANCE: I’ve talked a lot about the importance of output impedance. Since I wrote my Output Impedance article in February 2011, Benchmark Media published a paper of their own that explores it from some other angles. Both are well worth a read. The graph to the right shows the impedance of the HD650 both in free air and clamped on a head. There’s a big, broad impedance peak centered at about 90 Hz. If someone uses an amp with a sufficiently high output impedance, the frequency response will also have a broad audible peak centered around 90 Hz. The higher impedance can also reduce the damping and increase distortion. Combined, this could cause what many might describe as more “boomy” or “bloated” bass. Someone else, however, might think it sounds more “warm” or “full”. Regardless, the change would generally be considered the opposite of “thin” as used by Lieven. It’s possible his typical amp for the HD650 has relatively high output impedance, so that’s what he’s used to and considers “normal” for the HD650.
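
To put rough numbers on the output impedance effect, here’s a minimal Python sketch that treats the amp and headphone as a simple resistive voltage divider. The 300 ohm nominal and 500 ohm bass peak figures are round illustrative assumptions, not measurements of any particular HD650, and the 1, 10 and 120 ohm output impedances are hypothetical examples. Real headphone impedance also has phase, which this ignores.

```python
import math

def level_db(z_load, z_out):
    """Level at the headphone relative to the amp's open-circuit voltage, in dB.

    Simple resistive voltage divider: V_load / V_open = Z_load / (Z_load + Z_out).
    """
    return 20 * math.log10(z_load / (z_load + z_out))

def peak_boost_db(z_peak, z_nominal, z_out):
    """How much louder the impedance peak plays than the nominal region, in dB."""
    return level_db(z_peak, z_out) - level_db(z_nominal, z_out)

if __name__ == "__main__":
    # Illustrative values only: ~300 ohm nominal, ~500 ohm peak near 90 Hz.
    for z_out in (1.0, 10.0, 120.0):
        boost = peak_boost_db(500.0, 300.0, z_out)
        print(f"Zout {z_out:>5.1f} ohm: +{boost:.2f} dB around the bass peak")
```

With those assumed values the 120 ohm case works out to a bit over +1 dB around the bass peak, while a 1 ohm output stays near 0.01 dB. That’s why a low output impedance keeps the headphone’s own frequency response intact.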

LEVELS MATTER: If the level were just a few dB lower when Lieven listened to the O2, that alone would make it sound relatively more “thin” because of the Fletcher-Munson (equal-loudness) curves and psychoacoustics. Studies show even a 1 dB difference creates a preference for the louder source. But most gear reviewers who publish don’t even have an accurate way to match levels. That’s a serious problem.
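
Matching levels doesn’t require anything exotic. Here’s a minimal Python sketch, assuming you can measure the RMS voltage of the same test tone at each source’s headphone output with a meter. The 0.1 dB default tolerance is an assumed target, chosen to sit comfortably inside the 1 dB difference mentioned above:

```python
import math

def db_difference(v_rms_a, v_rms_b):
    """Level of source A relative to source B, in dB (voltage ratio)."""
    return 20 * math.log10(v_rms_a / v_rms_b)

def matched(v_rms_a, v_rms_b, tolerance_db=0.1):
    """True if the two sources are within tolerance_db of each other."""
    return abs(db_difference(v_rms_a, v_rms_b)) <= tolerance_db

def trim_factor(v_rms_a, v_rms_b):
    """Gain to apply to source A so it lands at source B's level."""
    return v_rms_b / v_rms_a
```

For example, 1.000 V from one source and 0.891 V from the other is about a 1 dB difference, enough to bias a comparison. Scaling the louder source by trim_factor() brings them together.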

TESTING ONE TWO THREE: As mentioned in the previous section, Stuart Yaniger, an engineer who designs tube audio gear, recently wrote an excellent print article titled Testing One Two Three (it’s not on the web that I know of). The article has excellent advice for how to properly conduct blind testing and he outlines some of his blind listening experiments:

- The Bastard Box – The author made an audiophile grade box comparing a $300 audiophile capacitor with a $1 capacitor from Radio Shack with the flip of a switch. He correctly identified the audiophile capacitor in blind listening only 7 of 12 times. Pure random guessing would be 6 of 12. By the statistical guidelines for blind testing, he needed to be correct 10 of 12 times for a meaningful (95% confidence) result. He failed so Radio Shack wins.

- Audibility Of Op Amp Buffers – He strung together 10 cheap op amp buffers in series to effectively multiply their flaws and did a blind ABX comparison of no buffers vs ten buffers. He found he could reliably hear the difference, so a buffer was removed (down to 9) and the test repeated. In the end he found that with 6 or fewer buffers strung together he could not reliably detect any difference. This is an excellent example of blind testing both demonstrating a clear difference and then demonstrating the opposite: no audible difference. Blind testing let the author easily find the threshold of audible transparency (6 buffers). It’s also further proof that op amps are not the evil devices many audiophiles think they are, when even six cheap ones strung in series can be transparent enough to sound just like a piece of wire. See: Op Amp Myths.

- Listener Fatigue – The author compared two preamps for detectable “listening fatigue” by arranging to listen blindly and randomly to one or the other, with two hour meters recording the total listening time of each. If one was less fatiguing, in theory, it should accumulate more total listening hours. After two months of listening to both, there wasn’t what he considered a significant difference in the total times, implying both had similar levels of fatigue or no fatigue.
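
The 10 of 12 threshold in the Bastard Box test comes from a one-sided binomial test, a standard way to score ABX results. Here’s a minimal Python sketch, assuming each trial is independent and a pure guess has a 50% chance of being right:

```python
from math import comb

def p_value(correct, trials, p_guess=0.5):
    """Probability of scoring at least `correct` out of `trials` by pure guessing."""
    return sum(comb(trials, k) * p_guess**k * (1 - p_guess)**(trials - k)
               for k in range(correct, trials + 1))

def min_correct(trials, alpha=0.05, p_guess=0.5):
    """Smallest score whose chance of happening by guessing is at or below alpha."""
    for correct in range(trials + 1):
        if p_value(correct, trials, p_guess) <= alpha:
            return correct
    return None  # too few trials: even a perfect score isn't significant

if __name__ == "__main__":
    print(f"7 of 12 by chance: {p_value(7, 12):.3f}")
    print(f"needed for 95% confidence with 12 trials: {min_correct(12)}")
```

With these functions, p_value(7, 12) is about 0.39, meaning pure guessing would do at least that well roughly 39% of the time, and min_correct(12) returns 10, matching the figure above. With only 4 trials, min_correct(4) returns None: even a perfect score happens by chance 1 time in 16.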

WHY WE HEAR WHAT WE HEAR: James “JJ” Johnston has lots of experience in audio ranging from Bell Labs to DTS. He’s made many presentations on audio and has spoken at Audio Engineering Society events. He’s recently re-formatted one of his presentations into an online article entitled Why We Hear What We Hear. It’s an interesting read with some valuable technical information on how our auditory system works. His article is a primary reference for my earlier comments about how we filter sound. His information correlates well with information from neurologists and brain experts as well as multiple AES papers on listening bias. Here are some highlights:

- Expecting Differences - Perhaps the most important thing to take away from Johnston’s work is on the last (fourth) page. He explains how when we listen for different things we will always hear different things depending on what we expect to hear. In other words I can play the same track for a person twice, with everything exactly the same, but if they’re expecting a difference they will in fact hear a difference. It’s not an illusion, or anything they can control, it’s just how our auditory system and brain work. This was also demonstrated in the AES paper Can You Trust Your Ears where the listeners consistently heard differences when presented with identical audio.

- Auditory Filtering – Johnston talks about our hearing system involuntarily filtering what we hear. There are two layers of “reduction” resulting in somewhere around 1/100,000 of the data picked up by our ears ultimately reaching our conscious awareness. This was discussed in the first section with examples provided.

- Auditory Memory – Johnston points out our ability to compare two sound sources starts to degrade after only 0.2 seconds. The longer we go between hearing Gear A and Gear B the less accurately we can draw conclusions about any differences. And the longer the gap the more expectation bias creeps into what we remember. Our memory, like our real-time hearing, is by necessity highly selective. Our brains are constantly discarding large amounts of “data”. Other studies back this up including the AES paper Ten Years of ABX Testing which found near immediate comparisons were far more likely to detect differences than extended listening with at least a few minutes between changes. This is the opposite of the common erroneous belief that long term listening is more sensitive.

AUDIO MYTHS WORKSHOP: The Audio Engineering Society (AES) is made up mostly of audio professionals, especially those involved with production work including CDs, movie scores, etc. Their conventions are heavily attended by recording engineers, professionals who make their living hearing subtle audio details, and the people behind the gear they use. At the 2009 Audio Myths Workshop a panel of four experts presented a lot of good information on what we think we hear and why. If you don’t have an hour to watch the video, or just want to know what to keep an eye out for, here are some highlights I found especially worthwhile:

- The Need For Blind Testing – JJ points out it doesn’t matter how skilled a listener you are: if you know something about the two things you’re trying to compare, your brain is unavoidably going to incorporate that information into what you hear and what you remember later.

- A Dirty Trick – JJ recounts a story about a fake switch being set up to supposedly switch between an expensive tube McIntosh amplifier and, in his words, a “crappy little solid state amplifier”. In reality the switch did nothing and, in either position, everyone was listening to the solid state amp. But the audiophiles listening unanimously preferred the supposed “sound” of the tube amp. They heard what they were expecting to hear. His engineer friends tended to prefer the solid state amp with only one claiming they sounded the same. This is further confirmation of expectation bias.

- Stairway To Heaven – Poppy Crum demonstrated how easy it is to hear almost anything when you’re told what you should be hearing. She played an excerpt from Stairway to Heaven backwards and there’s not much in the way of discernible lyrics. Then she put a slide up with suggested lyrics and most can easily hear exactly what’s on the slide with a fairly high degree of certainty. This is somewhat analogous to reading someone’s gear review, buying the same gear yourself, and hearing what you’ve been told you should hear.

- State Legilature – No that’s not a typo. Poppy Crum plays a news announcer clip multiple times with everyone listening carefully. The “S” in the middle of the word legiSlature was edited out and it goes largely unnoticed by the entire audience. The brain helpfully fills in the missing letter for you automatically. Despite listening carefully, the audience of audio professionals mostly fails to hear the obvious problem because their brains automatically “invent” the missing audio information.

- Crime Suspect – They play a TV clip of a theft occurring in a classroom. The students are asked to identify the thief. Many are so certain they can pick the right guy from a photo lineup that they would testify in court. But, in the end, they not only pick different photos, they’re all wrong despite their certainty. It’s further proof of how the brain filters and how unreliable our senses can be even when we think we’re certain.

- Argue To The Death – They talk about experiences on various audio forums where people argue subjective claims to the extreme using the argument “you can’t prove it doesn’t sound better”. No, but we can prove if typical people under realistic conditions can detect any difference with blind listening. Here’s a humorous parody of a typically “passionate” forum discussion (about hammers) that’s, sadly, analogous to some audio threads I’ve seen.

- Identical Results – Measurements can produce consistent, reliable, and repeatable results. I can test Gear A on Monday, re-test it a month later, and get very close to the same result. That’s far from true with sighted listening tests, where you can get very different results 10 minutes later if someone is expecting a difference. Further, John Atkinson and I can measure a DAC and we should both get very similar measurements as long as we use accepted techniques and publish enough details about the test conditions. He can verify my measurements, and I can verify his, with a high degree of certainty. Without carefully controlled blind testing, that’s impossible to do by ear.

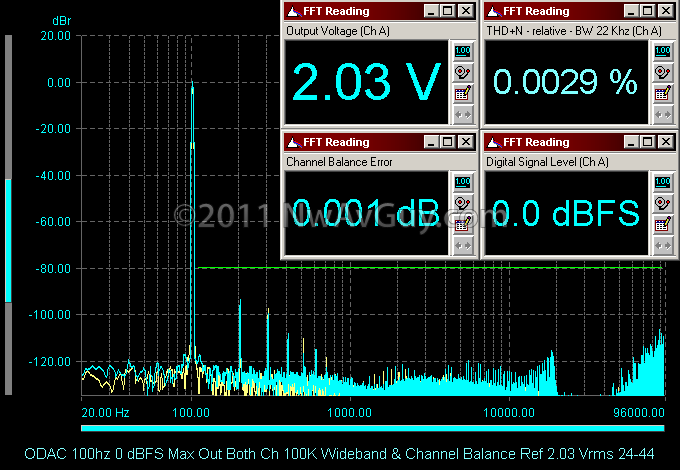

Transparency – Ethan Winer, an acoustics expert, discusses how audio electronics can be defined as audibly transparent by four broad categories of measurements and he provides criteria for complete transparency. He states that gear passing all these criteria will not contribute any audible sound of its own and in fact sound the same as any other gear passing the same criteria:

- Frequency Response: 20 Hz to 20 kHz +/- 0.1 dB

- Distortion: At least 100 dB (0.001%) below the music, although others consider 80 dB (0.01%) sufficient, and Ethan’s own tests support that (see below).

- Noise: At least 100 dB below the music

- Time-Based Errors: In the digital world this is jitter, and the same 100 dB rule applies to jitter components. (photo: Ethan Winer)

- Comb Filtering – This is a problem with speakers, and it’s likely the source of some audiophile myths. Ethan demonstrates how changing your listening position (where your ears are in the 3D space of the room) by just a few inches can create clearly audible changes in the high frequency response. Say you sit down to listen, get up to swap out a cable, and sit down again, but this time you’re leaning further back. You may well hear a difference, but it’s not the cable. It’s the room acoustics and comb filtering. And that’s in addition to all the other reasons you might hear differences unrelated to the cable.

- Noise & Distortion Thresholds – Ethan mixes a noise track with music at various levels to simulate similar levels of noise and distortion. On the cello excerpt I can still plainly hear the noise (in the WAV files) at –60 dB (0.1%) and barely hear it at –70 dB (0.03%). To my ears it’s gone at –75 dB (0.018%). So my usual rule of thumb of –80 dB (0.01%) is further validated, with some conservative margin for error. When, for example, I point out dissonant inter-channel IMD reaching –55 dB in the AMB Mini3 amplifier, DAC alias components in the range of –50 dB to –25 dB, or 1% THD (–40 dB) in a single-ended or tube amp, Ethan’s demonstration helps confirm it’s entirely plausible such distortion could be audible. Here’s a link to the audio files Ethan uses in the video. The cello example (Example2 in the WAV files) starts about 33:30 into the video. The noise plays for two cycles at –30 dBFS, then two cycles at –40 dBFS, and so on. Listen for yourself! When you see a spec like 1% THD, think about the plainly audible noise at –40 dB in Ethan’s examples. This somewhat oversimplifies the audibility threshold issue, but it’s a reasonable worst case approximation. Here it’s best to err on the side of being too conservative (likely the reason for Ethan’s own –100 dB transparency criterion).

- Phase Shift – Ethan demonstrates that even large amounts of phase shift applied at various frequencies are inaudible as long as the shift is applied equally to both channels. What can matter is interchannel phase shift: any significant shift between the left and right channels. Relative phase is also critical in multi-driver or multi-speaker setups (e.g., between a woofer and tweeter, or between front and rear speakers in a surround setup).

- Null (Differencing) Test – Toward the end Ethan explains how null testing (which includes audio differencing) reveals not only every known kind of distortion (THD, IMD, phase, noise, frequency response errors, etc.) but also unknown kinds. You literally subtract one signal from the other and analyze, or listen to, whatever is left over. With test signals the dScope audio analyzer can do this in real time in the analog, digital, or mixed (analog and digital) domains. In effect, the dScope subtracts the test signal from the device’s output and adds enough gain to boost what’s left over to a usable level. It’s a test that can bust a lot of myths about things claimed to degrade an audio signal. It can also be done with music, driving real headphones or speakers, either in the analog domain (e.g., the Hafler and Baxandall method, scroll down) or in the digital domain (e.g., Bill Waslo’s software from Liberty Instruments). Ethan presents a few examples, including the next item.

- Reverse EQ – Many people seem to think even high quality digital (DSP) EQ does irreparable harm to music. Ethan has an example where he applies EQ, then applies the exact opposite EQ, and then nulls (see above) the result against the original version (no EQ). He gets a near perfect null, indicating that even two passes through the EQ DSP did no audible damage.

- Other Examples – Ethan has further demonstrations aimed more at recording than playback. He does an interesting “bit truncation” demo, but it’s presented in a way that makes comparing the bit depths difficult.
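Several numbers in the list above are simple arithmetic and easy to check. The Python sketch below (standard library only) converts between dB and percent as in the distortion and noise criteria, estimates comb-filter notch frequencies for a direct sound plus one equal-level reflection, and runs a toy null test of an “EQ” followed by its exact inverse, in the spirit of Ethan’s reverse EQ demo. The one-tap filter, the 0.5 m and 0.6 m path differences, and the 343 m/s speed of sound are illustrative assumptions, not anything taken from Ethan’s video.

```python
import math


def db_to_percent(db: float) -> float:
    """Convert a level relative to the music in dB (e.g. -80) to percent."""
    return 100.0 * 10 ** (db / 20.0)


def percent_to_db(percent: float) -> float:
    """Convert a THD or noise figure in percent (e.g. 1.0) to dB."""
    return 20.0 * math.log10(percent / 100.0)


def comb_notches(path_difference_m: float, max_hz: float = 20_000.0,
                 speed_of_sound: float = 343.0) -> list[float]:
    """Notch frequencies when a direct sound and ONE equal-level reflection
    arrive delay = path_difference / c apart: f_k = (2k + 1) / (2 * delay).
    Real reflections are weaker, so real dips are shallower than true nulls."""
    delay = path_difference_m / speed_of_sound
    notches = []
    k = 0
    while (2 * k + 1) / (2 * delay) <= max_hz:
        notches.append((2 * k + 1) / (2 * delay))
        k += 1
    return notches


def _rms(samples: list[float]) -> float:
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def null_residual_db(original: list[float], processed: list[float]) -> float:
    """Null test: subtract the processed signal from the original and report
    what's left over relative to the original, in dB."""
    residual = _rms([a - b for a, b in zip(original, processed)])
    if residual == 0.0:
        return float("-inf")  # a perfect null
    return 20.0 * math.log10(residual / _rms(original))


def tilt_eq(x: list[float], g: float) -> list[float]:
    """Hypothetical one-tap 'tilt' EQ: y[n] = x[n] + g * x[n - 1]."""
    return [x[n] + g * (x[n - 1] if n > 0 else 0.0) for n in range(len(x))]


def inverse_tilt_eq(y: list[float], g: float) -> list[float]:
    """Exact opposite of tilt_eq for |g| < 1: x[n] = y[n] - g * x[n - 1]."""
    x: list[float] = []
    for n, v in enumerate(y):
        x.append(v - g * (x[n - 1] if n > 0 else 0.0))
    return x


if __name__ == "__main__":
    print(round(percent_to_db(1.0), 1))     # -40.0, i.e. 1% THD
    print(round(db_to_percent(-75.0), 3))   # 0.018 (%)
    print([round(f, 1) for f in comb_notches(0.50)[:3]])  # [343.0, 1029.0, 1715.0]
    print([round(f, 1) for f in comb_notches(0.60)[:3]])  # 10 cm more: notches move
    music = [math.sin(2 * math.pi * 0.01 * n) for n in range(1000)]
    round_trip = inverse_tilt_eq(tilt_eq(music, 0.5), 0.5)
    print(null_residual_db(music, round_trip))           # far below -100 dB (or -inf)
    print(null_residual_db(music, tilt_eq(music, 0.5)))  # EQ alone: about -6 dB
```

The null test result is the point: after the EQ and its exact opposite, what’s left over is only floating-point rounding, while the EQ on its own leaves a large residual. Moving the reflection path by 10 cm shifts every notch, which is the comb filtering effect Ethan demonstrates by moving his head.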

BLIND LISTENING GUIDELINES: There’s a lot of info on how to conduct reasonably valid blind testing, and some of it gets very elaborate. But don’t let that scare you away from trying it. If you’ve never tried blind listening before, you might be surprised how quickly even a very simple test opens your eyes. As many wine critics have found out, comparisons immediately become much more difficult when you don’t know which is which. You can get more meaningful results just by following some simple guidelines:

- Compare only two things at a time until you reach a conclusion

- Always use identical source material with no detectable time shift between A and B

- Match levels, ideally to within 0.1 dB, using a suitable meter and test signal

- Watch out for unintentional clues that might hint at which source is which

- Ideally perform enough trials to get a statistically valid result (typically 10 or more, but even 5 can be informative)
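How many trials are “enough”? A listener who can’t hear any difference is effectively flipping a coin on each trial, so the chance of reaching a given score by luck follows the binomial distribution. The sketch below (Python, standard library) computes that one-sided probability. The function name is illustrative, and the common 0.05 cut-off is a convention, not a law.

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """One-sided binomial test: the probability of scoring `correct` or more
    out of `trials` if every answer were a 50/50 guess."""
    if not 0 <= correct <= trials:
        raise ValueError("need 0 <= correct <= trials")
    lucky_outcomes = sum(comb(trials, k) for k in range(correct, trials + 1))
    return lucky_outcomes / 2 ** trials


if __name__ == "__main__":
    for correct, trials in [(5, 5), (8, 10), (9, 10), (12, 16)]:
        print(f"{correct}/{trials}: p = {abx_p_value(correct, trials):.3f}")
```

Five out of five by pure luck happens 1 time in 32 (p ≈ 0.031), which is why even five trials can be informative. Eight out of ten comes out around 0.055, just missing the usual 0.05 cut-off, while nine out of ten is about 0.011.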

TRYING ABX YOURSELF: foobar2000 has an ABX component, and there are other free ABX options. This is an excellent way to, say, compare a 16/44 FLAC file with a high resolution FLAC file. There are files available at Hydrogenaudio, or you can make your own if you have any good 24/96 or 24/88 demo tracks. But always use a high quality sample rate conversion of the high resolution track to produce the 16/44 track. Or compare a 256K AAC file with a 256K MP3 file ripped from the same CD track, and so on. What’s important is that you eliminate all other differences: the levels have to be the same, etc. Audacity is free and can be used to edit the files. If the tracks are not in time sync, you can configure the ABX options to restart the track each time you switch.
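Level matching is the step most often skipped. As a minimal sketch, assuming both versions have already been decoded to lists of floating-point samples (full scale = 1.0) and time aligned, the RMS level difference and the gain needed to cancel it can be computed as below. The helper names are hypothetical. For comparing two pieces of gear rather than two files, run a test tone through both, as the guidelines above suggest.

```python
import math


def rms_db(samples: list[float]) -> float:
    """RMS level in dB relative to a full-scale value of 1.0."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms)


def matching_gain(reference: list[float], other: list[float]) -> float:
    """Linear gain that brings `other` to the same RMS level as `reference`."""
    return 10 ** ((rms_db(reference) - rms_db(other)) / 20.0)


if __name__ == "__main__":
    # Hypothetical example: a 1 kHz tone at 48 kHz, and a copy 0.5 dB quieter.
    tone = [0.5 * math.sin(2 * math.pi * 1000 * n / 48_000) for n in range(48_000)]
    quieter = [s * 10 ** (-0.5 / 20.0) for s in tone]
    print(f"mismatch: {rms_db(tone) - rms_db(quieter):.2f} dB")  # mismatch: 0.50 dB
    gain = matching_gain(tone, quieter)
    matched = [s * gain for s in quieter]
    print(f"after matching: {abs(rms_db(tone) - rms_db(matched)):.3f} dB")  # 0.000 dB
```

A 0.5 dB mismatch is small enough to go unnoticed as a loudness change yet can still make the louder version sound “better”, which is why the guideline asks for 0.1 dB.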

FOOBAR ABX: There’s a YouTube video on ABX testing with foobar2000. The software and ABX component can be downloaded here. The listener can, at any time, choose to listen to amp A, amp B, or “amp X”, which will randomly be either A or B without the listener knowing which. The software keeps track of X and lets the listener vote whenever they feel they know whether X is really A or B. A running score of the votes is kept, normally hidden. After ten or more votes, the final results can be revealed and checked for a statistically valid result. If a difference is detected, someone else can set up the system so the listener doesn’t know which is A and which is B. The listener can then pick a favorite, again taking as much time as they like, without the expectation bias that’s unavoidable when they know which is which.
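To make the bookkeeping concrete, here is a hypothetical Python sketch of an ABX session: X is secretly assigned to A or B on each trial, the listener can switch among A, B, and X freely, votes, and the score stays hidden until the end. It is not how the foobar2000 ABX component is implemented, only an illustration of the protocol described above.

```python
import random
from typing import Optional


class AbxSession:
    """Hypothetical ABX bookkeeping: on each trial X is secretly A or B,
    the listener votes, and the score stays hidden until reveal()."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self._rng = random.Random(seed)
        self._x: Optional[str] = None
        self._correct = 0
        self._trials = 0

    def new_trial(self) -> None:
        """Randomly (and secretly) decide whether X is A or B this trial."""
        self._x = self._rng.choice("AB")

    def source_for(self, button: str) -> str:
        """What the player should route to the output for 'A', 'B', or 'X'.
        The listener may switch among all three as often as they like."""
        if self._x is None:
            raise RuntimeError("call new_trial() first")
        return self._x if button == "X" else button

    def vote(self, answer: str) -> None:
        """Record the listener's guess of what X is; the result is not shown."""
        if self._x is None:
            raise RuntimeError("call new_trial() first")
        self._trials += 1
        self._correct += int(answer == self._x)
        self._x = None

    def reveal(self) -> tuple[int, int]:
        """Return (correct, trials) once the listener is done."""
        return self._correct, self._trials


if __name__ == "__main__":
    # A listener who can't hear a difference is effectively guessing.
    session = AbxSession(seed=1)
    guesser = random.Random(2)
    for _ in range(16):
        session.new_trial()
        session.vote(guesser.choice("AB"))
    correct, trials = session.reveal()
    print(f"{correct}/{trials} correct")  # hovers around half by chance
```

Keeping the score hidden until the end matters for the same reason blinding does: seeing a string of “wrong” answers mid-session changes how the listener approaches the remaining trials.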

A BLIND ANECDOTE: I received some cranky emails from someone who had read my Op Amp Myths article and basically said I must be crazy or deaf for not hearing the obvious differences between op amps. He was a serious DIYer who claimed to have designed and built a lot of his own gear. I suggested he rig up two identical pieces of gear, both with socketed op amps, so he could level match them, switch between them, and directly compare any two op amps. I figured he’d never bother. Much to my surprise, his next email a few days later was an apology: he explained it had been really eye opening and thanked me. I’m fairly sure he’ll approach his future designs somewhat differently.

REAL ENGINEERING OR SNAKE OIL? I’m curious what others think of the $3995.00 BSG Technologies qøl™ Signal Completion Stage. It’s an all analog device (line-in/line-out) that, according to BSGT, “Reveals information that, until now, has remained hidden and buried in all audio signals”. How, I wonder, does it do that? The Nyquist Sampling Theorem, together with the CD’s 16 bit word length, dictates how much information can be reconstructed from a CD, and a decent DAC, as far as I know, reconstructs all of it. In fact, a good 24 bit DAC can reconstruct data well below the 16 bit noise floor of a CD, i.e. the DAC is better than the CD itself. So, from what I know, every sample on the CD can be reliably and completely converted into an analog signal. Hence there should not be any “hidden information” on the CD. One can argue information got lost further upstream in the recording chain (at the microphones, due to the 22 kHz Nyquist bandwidth limit, etc.). But that information isn’t on the CD at all, so it would be quite a miracle if it could be reconstructed out of nothing. Does anyone else have any theories on this nearly $4K device and its main marketing premise?
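The two limits behind that argument are easy to put numbers on: the Nyquist frequency (half the sample rate) sets the bandwidth, and the word length sets the theoretical noise floor of an ideal quantizer. The sketch below uses the textbook formula for a full-scale sine with uniformly distributed quantization noise and no dither. Real converters fall short of the 24 bit figure because of analog noise, so treat it as an upper bound.

```python
import math


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a sampled signal can represent: half the sample rate."""
    return sample_rate_hz / 2.0


def ideal_snr_db(bits: int) -> float:
    """Theoretical SNR of an ideal N-bit quantizer with a full-scale sine,
    10 * log10(1.5 * 4**N), i.e. the familiar 6.02 * N + 1.76 dB."""
    return 10.0 * math.log10(1.5 * 4 ** bits)


if __name__ == "__main__":
    print(nyquist_hz(44_100))            # 22050.0 Hz for CD
    print(round(ideal_snr_db(16), 1))    # 98.1 dB for 16 bits
    print(round(ideal_snr_db(24), 1))    # 146.3 dB in theory for 24 bits
```

For CD that works out to a 22.05 kHz bandwidth limit and roughly 98 dB of theoretical dynamic range, both fixed at the time the disc is made.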

HAVE FUN! The above is just one example. I know several audio fans who have had fun discovering, through blind testing, just what they can and can’t really hear. It opens up an entirely new perspective and a new way to experiment with audio. It’s especially useful, and accessible, for DIYers.