INTRO: Some have asked for more details on jitter. It's a controversial topic and there are lots of myths associated with it. Here’s what I know.

TYPICAL JITTER MYTH: I read in a well-regarded high-end audiophile magazine that portable players have less jitter because they use flash memory, compared to a PC using a rotating magnetic hard drive. The explanation given: because a hard drive is spinning, it must have jitter, while solid state flash memory does not. Like so many things in high-end audiophile magazines, this has absolutely no basis in fact. Jitter doesn't come from how the digital audio file is stored. It comes from how the audio was created, how it is sent in real time over interfaces (files themselves are not real-time), and how it is reconstructed.

THE FLAC JITTER MYTH: Some argue that FLAC sounds worse than a WAVE file because the compression/decompression process and/or CPU loading introduces jitter. This is completely false. The DAC receives the identical bitstream for either file type.
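One way to check the "identical bitstream" point yourself is to decode the FLAC file back to WAV (for example with the reference `flac -d` decoder) and compare the raw PCM samples with the original WAV. The minimal sketch below uses only Python's standard `wave` and `hashlib` modules; the helper names are hypothetical, and it assumes both files are plain PCM WAV files that `wave` can open. Header and metadata chunks are ignored, so only the audio samples are compared.

```python
import hashlib
import wave

def pcm_fingerprint(path):
    """Return the format and a SHA-256 digest of the raw PCM frames in a WAV file.

    Only the audio samples are hashed; header and metadata chunks are ignored."""
    with wave.open(path, "rb") as w:
        fmt = (w.getnchannels(), w.getsampwidth(), w.getframerate())
        frames = w.readframes(w.getnframes())
    return fmt, hashlib.sha256(frames).hexdigest()

def same_audio(path_a, path_b):
    """True if both WAV files carry bit-identical samples in the same format."""
    return pcm_fingerprint(path_a) == pcm_fingerprint(path_b)
```

If `same_audio("original.wav", "decoded.wav")` returns `True`, both files hold exactly the same samples, so any playback difference has to come from somewhere other than the file format.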

THE AES3 VS COAX VS TOSLINK MYTH: While it’s true jitter can be induced by interconnecting digital equipment, all three of the most popular standards AES3 (also called AES/EBU), S/PDIF coaxial, and S/PDIF TOSLINK optical, use essentially the same bitstream format and all combine the clock with the data itself. One format isn’t necessarily better than the other two as it very much depends on other variables. 3 feet of optical TOSLINK might easily outperform 6 feet of coax or 12 feet of AES3. It depends on the cable quality, transmitting hardware, receiving hardware, potential sources of interference, and more. The 3 standards are all prone to similar kinds of problems with AES3 being a bit more robust. Contrary to what some believe, the extra pin in AES3 is not a dedicated clock.

A REAL LISTENING TEST: I participated in a listening test at an Audio Engineering Society conference where they played test tracks with varying known levels of different kinds of jitter. It wasn't a rigid ABX or double blind test, but it was very enlightening. We, the listeners, didn't know which tracks were which until they were explained later. The most obvious audible effect was that high amounts of jitter at certain frequencies sound much like the old analog "wow and flutter" produced by vinyl turntables and tape recorders. Both devices have tiny deviations in speed. They might be slow variations (wow), like a vinyl record with the hole punched off-center, or fast ones (flutter), caused by the motor not rotating smoothly or by bad bearings. Digital jitter, interestingly, often produces a rather similar end result.

PIANOS AND CYMBALS ARE CHALLENGING: Acoustic piano music seems to be an especially sensitive indicator of jitter (and wow and flutter). As the jitter increases, the notes take on a more brittle quality--that expensive Steinway Grand now sounds more like a garage-sale upright piano. And when the jitter is really high at certain frequencies, you can hear a "warble" to sustained piano notes--just like with low quality tape players or turntables. Some also say cymbals are good at revealing jitter.

WHAT IS JITTER ITSELF? Imagine a ticking clock that keeps perfect time over the long haul, but the second hand might move every 0.9, 1.0, or 1.1 seconds. If the variations average out evenly, the clock still keeps perfect time. But if you try to measure just 1 second, it might be off by 10%. That's jitter. The 44.1 kHz clock used for CD audio averages out to the right rate, but the individual “ticks” may not be so accurate. These variations introduce a form of time distortion into the music. Excessive jitter is typically caused by poor design, cost savings, poor interfaces, or other shortcuts.
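The ticking-clock analogy can be checked numerically. This minimal sketch assumes the second hand cycles through the 0.9, 1.0 and 1.1 s intervals from the example in a fixed repeating order (an assumption; real jitter is rarely this tidy). The function names are hypothetical.

```python
import itertools

def tick_intervals(pattern, n_ticks):
    """Repeat a pattern of tick-to-tick intervals (in seconds) for n_ticks ticks."""
    return list(itertools.islice(itertools.cycle(pattern), n_ticks))

def clock_report(intervals, nominal=1.0):
    """Return (average interval, worst single-tick error, worst accumulated drift)."""
    average = sum(intervals) / len(intervals)
    worst_tick = max(abs(i - nominal) for i in intervals)
    # Elapsed time after k ticks versus an ideal clock; stays bounded when errors cancel
    elapsed = itertools.accumulate(intervals)
    worst_drift = max(abs(t - nominal * k) for k, t in enumerate(elapsed, start=1))
    return average, worst_tick, worst_drift

avg, tick_err, drift = clock_report(tick_intervals([0.9, 1.0, 1.1], 3600))
print(f"average {avg:.3f} s, worst tick error {tick_err:.0%}, worst drift {drift:.2f} s")
```

Over an hour of ticks the average stays at 1 second and the clock never drifts by more than a tenth of a second, yet any single tick can be 10% off. That single-tick error is what matters when the "ticks" are audio sampling instants.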

JITTER IN SPECTRUM VIEW: If you look at the audio spectrum, both wow & flutter and jitter show up as symmetrical sidebands around a pure fundamental tone. Very low frequency jitter causes the base of the pure tone to “spread”. The height and extent of the spread indicate the magnitude of low frequency jitter. The height and number of the sidebands indicate the magnitude of higher frequency jitter.
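A small simulation shows where the symmetrical sidebands come from. The sketch below samples a pure tone but displaces every sampling instant by a sinusoidal timing error, then measures the spectrum at a few frequencies with a single-bin DFT (standard library only). The 12 kHz tone, 48 kHz sample rate and 100 ns peak jitter at 1 kHz are assumptions chosen to make the effect obvious; the jitter is far larger than any reasonable real device would show.

```python
import cmath
import math

def jittered_tone(f0, fs, n, jitter_peak_s, f_jitter):
    """Sample a unit-amplitude sine at f0, with every sampling instant displaced
    by a sinusoidal timing error of peak jitter_peak_s at rate f_jitter."""
    samples = []
    for k in range(n):
        t = k / fs
        t_actual = t + jitter_peak_s * math.sin(2 * math.pi * f_jitter * t)
        samples.append(math.sin(2 * math.pi * f0 * t_actual))
    return samples

def level_db(x, fs, freq):
    """Single-bin DFT level at freq, in dB relative to a unit-amplitude sine.

    freq must be a multiple of fs / len(x) so it falls exactly on a bin and
    no window is needed."""
    n = len(x)
    acc = sum(v * cmath.exp(-2j * math.pi * freq * k / fs) for k, v in enumerate(x))
    return 20 * math.log10(max(2 * abs(acc) / n, 1e-300))

fs, n = 48000, 4800                  # 10 Hz bins; every frequency below lands on a bin
f0, fj, dt = 12000, 1000, 100e-9     # 100 ns peak at 1 kHz: deliberately huge
x = jittered_tone(f0, fs, n, dt, fj)
for f in (f0 - fj, f0, f0 + fj, 12500):
    print(f, "Hz:", round(level_db(x, fs, f), 1), "dB")
```

The two sidebands at 11 kHz and 13 kHz come out equal, roughly 48 dB below the tone, while 12.5 kHz (not a sideband frequency) shows essentially nothing. Making `fj` smaller moves the sidebands closer to the tone, which is how very slow jitter ends up looking like "spread" at its base.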

SAMPLING ACCURACY: To properly digitize and reconstruct digital audio, it's important that the sampling intervals be as accurate as possible, both when the recording is made and when it's played back. Random or periodic variation to these intervals along the way is jitter. It gets complicated (and controversial) to describe all the possible sources, and interactions, of jitter, but some things are fairly well understood and agreed upon.
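Why sampling-interval accuracy matters more at high frequencies: a sine changes fastest at its zero crossings, where the slope of A·sin(2πft) is 2πfA. A sample taken Δt too early or late is therefore wrong by up to about 2πfAΔt. The sketch below computes that worst-case error for a full-scale tone; the frequencies and jitter values are illustrative assumptions, and the result is a first-order bound, not an audibility threshold.

```python
import math

def worst_case_jitter_error(freq_hz, jitter_s, amplitude=1.0):
    """First-order peak sample error for a sine sampled jitter_s early or late.

    The slope of A*sin(2*pi*f*t) peaks at 2*pi*f*A (at the zero crossing),
    so a timing error dt changes the sampled value by roughly slope * dt."""
    return 2 * math.pi * freq_hz * amplitude * jitter_s

def to_dbfs(value):
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    return 20 * math.log10(value)

for f, dt in [(1000, 1e-9), (20000, 1e-9), (20000, 100e-12)]:
    print(f"{f} Hz, {dt * 1e12:.0f} ps -> {to_dbfs(worst_case_jitter_error(f, dt)):.1f} dBFS")
```

The same timing error produces 20 times more error at 20 kHz than at 1 kHz, which is one reason high frequency content (piano transients, cymbals) tends to be where jitter is discussed.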

JITTER SOURCES: Clock circuits are everywhere. Pretty much anything with a CPU—right down to the little keyfob that unlocks your car’s doors—has a clock in it. For some applications, like a wristwatch, the important spec is how accurate the clock is when it’s manufactured, over time, and with changes in temperature. If it averages above or below the designed frequency, the watch won’t keep accurate time. But jitter hardly matters in this application. It could be relatively huge and the watch would still work exactly as expected.

CLOCK PHASE NOISE: Another name for clock jitter is “phase noise”. The clock for digital audio equipment has to be both accurate in frequency (otherwise the audio will play fast or slow) and have low jitter (to avoid significant sampling error). The lower the jitter the more expensive the clock circuitry becomes. All clocks have some inherent jitter even if they’re operated in a perfect isolated environment, from a perfect power supply, etc. And when you put them in a less than perfect environment—typical consumer electronics—the jitter will usually get worse because there’s noise on the power supply, ground noise, and likely some EMI (electromagnetic noise) from other parts of the circuitry. Just changing the PC board layout can have a significant impact on jitter performance even using the exact same parts.

JITTER REDUCTION: Some audiophile manufacturers making jitter reduction claims are likely just touting the features built into whatever chip(s) they’re using and going off the datasheet for the part(s). Some, I know for a fact, don’t even have the equipment to properly measure jitter. So it’s not surprising some of the products from these manufacturers have lousy jitter performance despite the high-end parts used and their marketing claims. You can’t take jitter performance for granted, design only by ear, etc. You have to measure it.

JITTER REDUCTION METHODS: Various methods are used for jitter reduction. Some use a PLL, a double PLL, Asynchronous Sample Rate Conversion (ASRC) techniques (as Benchmark does), and now some USB products use a different form of the USB Audio interface specification known as Asynchronous. Each company will often argue their way is the best way. But, in reality, each has its own strengths and weaknesses. The best way I know to compare them is to evaluate the jitter spectrum using a J-Test.
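PLL-based jitter reduction works because the loop can only follow slow changes in the incoming clock's timing; faster wobble gets averaged away. The sketch below is a deliberately crude first-order model (a one-pole low-pass filter acting on the timing error), not a model of any particular chip; the 100 Hz loop bandwidth, 48 kHz update rate and function name are assumptions. It also shows a trade-off: jitter below the loop bandwidth passes straight through.

```python
import math

def pll_jitter_transfer(f_jitter_hz, loop_bw_hz, update_rate_hz=48000, n=48000):
    """Crude first-order PLL model: the recovered clock's timing follows the
    incoming timing error through a one-pole low-pass of bandwidth loop_bw_hz.
    Returns output jitter amplitude / input jitter amplitude after settling."""
    alpha = 1 - math.exp(-2 * math.pi * loop_bw_hz / update_rate_hz)
    recovered = 0.0
    peak = 0.0
    for k in range(n):
        incoming = math.sin(2 * math.pi * f_jitter_hz * k / update_rate_hz)  # normalized timing error
        recovered += alpha * (incoming - recovered)   # loop nudges toward the incoming timing
        if k >= n // 2:                               # skip the start-up transient
            peak = max(peak, abs(recovered))
    return peak

for fj in (10, 100, 1000, 10000):
    print(fj, "Hz jitter ->", round(pll_jitter_transfer(fj, loop_bw_hz=100), 3), "x")
```

Lowering the loop bandwidth rejects more jitter but makes the loop slower to lock and more dependent on the quality of its own local oscillator, which is part of why designers argue over PLLs, double PLLs and ASRC.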

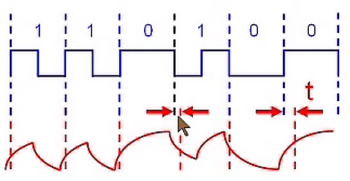

CABLE JITTER: Cables can “smear” digital signals by attenuating the highest frequencies. The diagram shown here is an example. The top blue waveform illustrates a perfect digital S/PDIF or AES3 bitstream. The bottom red waveform is what you might get out the other end of a long cable. The hardware receiving the signal uses the “zero crossings”—where the signal crosses an imaginary line drawn horizontally through the middle of the red waveform—to extract the clock. As you can see by the red arrows, that isn’t always correct due to the waveform distortion. The gaps between the red arrows are jitter. The amount of “smearing” depends on the bitstream itself, so as the audio signal changes, so does the recovered clock timing, creating jitter correlated with the audio itself.
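The cable effect can be sketched with a first-order RC low-pass model of the cable. This is a simplification: it uses plain NRZ bits at ±1 rather than the biphase-mark coding S/PDIF and AES3 actually use, and the time constant and function name are assumptions. It still shows the key point: where the waveform crosses zero depends on the bits that came before, so the recovered clock timing depends on the data.

```python
import math

def crossing_offsets(bits, ui, tau):
    """Delay from each ideal bit boundary to the actual zero crossing.

    Models the cable as a single RC pole with time constant tau driving a +1/-1
    NRZ stream with unit interval ui. Returns one offset per level change."""
    if tau >= ui / math.log(2):
        raise ValueError("tau too large: the line would not cross zero within one bit")
    v = 1.0 if bits[0] else -1.0          # assume the line has settled on the first bit
    offsets = []
    for prev, cur in zip(bits, bits[1:]):
        target = 1.0 if cur else -1.0      # level the driver now pushes toward
        if cur != prev:
            # v(t) = target + (v - target) * exp(-t / tau) reaches 0 at t = tau * ln(1 - v / target)
            offsets.append(tau * math.log(1 - v / target))
        v = target + (v - target) * math.exp(-ui / tau)   # line voltage at the end of this bit
    return offsets

pattern = [0] * 8 + [1, 0, 1, 0, 1, 0] + [1] * 8 + [0]
for t in crossing_offsets(pattern, ui=1.0, tau=0.5):
    print(f"{t:.3f} UI")
```

A transition after a long run of identical bits crosses zero later than one after a short run, because the line had more time to settle at the old level. The spread between those offsets is data-dependent jitter.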

RECORDING JITTER IS A MORE SERIOUS PROBLEM: If the device doing the A/D conversion during recording has a poor clock, or there are other clocking issues (such as a multi-track with multiple A/D devices recording simultaneously), the music will not be reliably sampled with low jitter. And, contrary to what many manufacturers and the audiophile media would like you to believe, you cannot correct for recorded jitter during playback. Even those uber-expensive "jitter correction" devices like the Genesis Digital Lens can't fix recorded jitter. This is partly why I invested nearly $2000 in the Benchmark ADC1. I wanted an A/D that had reference quality jitter performance, as you're stuck with whatever the A/D hardware gives you.

FURTHER INFORMATION: Here are 3 links: Benchmark's excellent description of jitter and how they deal with both measuring it and eliminating it, an independent Sound on Sound review that discusses Benchmark's approach, and a rather wordy and technical PDF of an Audio Critic issue which had jitter as its feature article:

Jitter Effects & Measurement (by John Siau, Benchmark Media)

Sound on Sound Benchmark Review With Jitter Discussion (an independent verification of Benchmark’s method)

Audio Critic Jitter Issue (pdf)

MEASURING JITTER FROM AN ANALOG SIGNAL: There are various ways to measure or evaluate jitter depending on what you're testing. If you're forced to test in the analog domain (i.e. gear with only an analog output), you generally use a particular FFT spectrum analysis of certain test signals and visually interpret the results. The question is what signal to use?

J-TEST: Julian Dunn at Prism Sound (makers of the dScope) developed the Jtest as a way to provoke “worst case” jitter for the purposes of measurement. His work was published by the Audio Engineering Society and has become something of a benchmark for jitter measurements. The Jtest signal creates a jitter “torture test” at exactly 1/4 the sampling rate combined with toggling the lowest bit in a way that exposes jitter. I use the same Jtest signal with a similar spectrum analysis, so my results should compare well with those in Stereophile and elsewhere.
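A sketch of how such a test signal can be built, based on one commonly cited description of the Jtest (treat the exact parameters as an assumption and check Dunn's AES paper before relying on them): a tone at Fs/4 formed by the sample pattern +half scale, +half scale, -half scale, -half scale (a -3 dBFS sine), plus a square wave in the least significant bit with a period of 192 samples (Fs/192). The function name is hypothetical.

```python
def jtest_samples(n_samples, bits=16):
    """Integer samples for a J-Test-style signal (parameters assumed, see lead-in)."""
    half = 1 << (bits - 2)                        # half of full scale: 16384 for 16-bit
    out = []
    for n in range(n_samples):
        tone = half if n % 4 < 2 else -half       # period of 4 samples -> Fs/4, -3 dBFS
        lsb = 0 if (n // 96) % 2 == 0 else -1     # 96 samples at 0, 96 at -1 -> Fs/192
        # The -1 step turns 0x4000 into 0x3FFF and 0xC000 into 0xBFFF, flipping 15 of 16 bits
        out.append(tone + lsb)
    return out

fs = 44100
print("tone:", fs / 4, "Hz, LSB square wave:", fs / 192, "Hz")
print(sorted(set(jtest_samples(192))))
```

At 44.1 kHz the tone lands at 11,025 Hz. The tiny LSB step is inaudible in itself, but it makes almost every bit in the word change state at once, which is what "provokes" data-dependent jitter in an interface or receiver.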

SIDEBANDS: As mentioned earlier, periodic higher frequency jitter is revealed as symmetrical pairs of “sidebands” around the main signal—this is analogous to the old mechanically induced flutter. The “height” or peak level of these sidebands indicates the relative amplitude (in picoseconds or nanoseconds) of the jitter components. “Spread” at the base of the main 11,025 Hz signal, on the other hand, indicates random low frequency jitter—this is analogous to the old mechanically induced wow. The greater the spread, and the higher in amplitude it reaches, the greater the low frequency jitter amplitude.
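For periodic jitter, sideband height maps directly to a timing amplitude. A timing error of peak Δt on a tone at f0 is a phase deviation of β = 2π·f0·Δt, and for small β each sideband sits about β/2 below the tone, i.e. at 20·log10(π·f0·Δt) dB relative to it. The sketch below assumes pure sinusoidal jitter and a Jtest tone at -3 dBFS (an assumed level); random jitter spreads its energy instead and doesn't follow this simple formula. Function names are hypothetical.

```python
import math

def sideband_db(f0_hz, jitter_peak_s):
    """Level of each sideband, in dB relative to the tone, for sinusoidal jitter of
    peak amplitude jitter_peak_s (small-angle approximation: sideband = beta / 2)."""
    return 20 * math.log10(math.pi * f0_hz * jitter_peak_s)

def jitter_from_sideband(f0_hz, sideband_db_re_tone):
    """Inverse of sideband_db: peak sinusoidal jitter (seconds) implied by a sideband pair."""
    return 10 ** (sideband_db_re_tone / 20) / (math.pi * f0_hz)

tone_dbfs = -3.0        # assumed Jtest tone level
sideband_dbfs = -110.0  # example sideband level read off a spectrum plot
dt = jitter_from_sideband(11025, sideband_dbfs - tone_dbfs)
print(f"{sideband_dbfs} dBFS sidebands ~ {dt * 1e12:.0f} ps peak jitter")
```

Under these assumptions a sideband pair at -110 dBFS around the 11,025 Hz tone corresponds to roughly 130 ps of peak sinusoidal jitter.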

MEASUREMENT VALIDATION: Variations of this method have been well documented and verified by many. It relies on the fact that nearby symmetrical sidebands around the high frequency signal are not likely to be from anything but jitter. The sidebands are offset from the test tone by only a small fraction of the sample rate, so “distortion” components this close, in matched pairs, are extremely likely to be jitter related. Here's a description that's less technical than most and also serves to help validate the Benchmark link above: Measuring Jitter

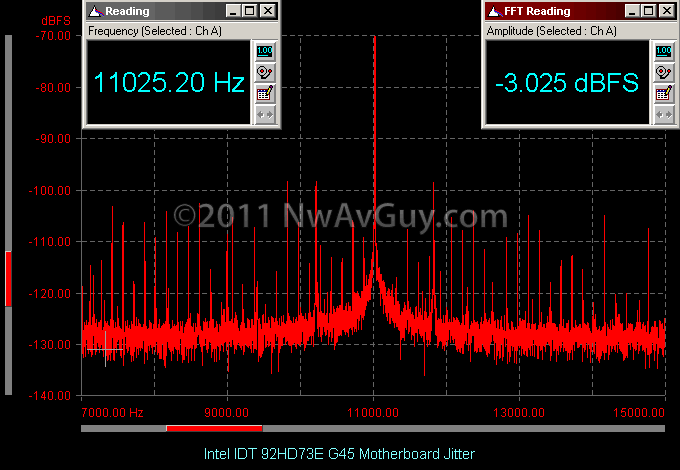

PC SOUND OUTPUT: I've used the above technique and can say it works. Some devices have minimal jitter, and some have fairly obvious jitter. Here, for example, is the Jtest jitter spectrum of a typical PC’s audio output. The high amount of noise on the motherboard likely corrupts the digital audio clock and/or bitstreams creating more sources for high frequency periodic jitter than usual. There’s also significant “spread” indicating low frequency jitter:

M-AUDIO TRANSIT USB INTERFACE: The M-Audio shows much more “spread” all the way up to about –88 dB and fewer sidebands slightly lower in level than above:

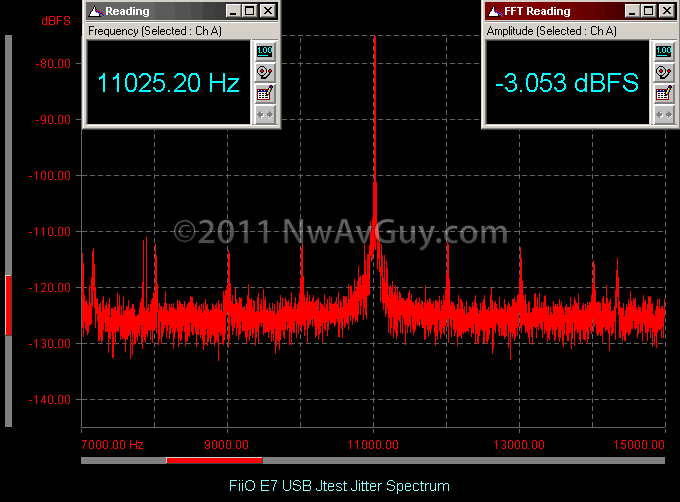

FiiO E7 USB DAC: This is an improvement over both of the above showing less spread and a rather different distribution of jitter sidebands:

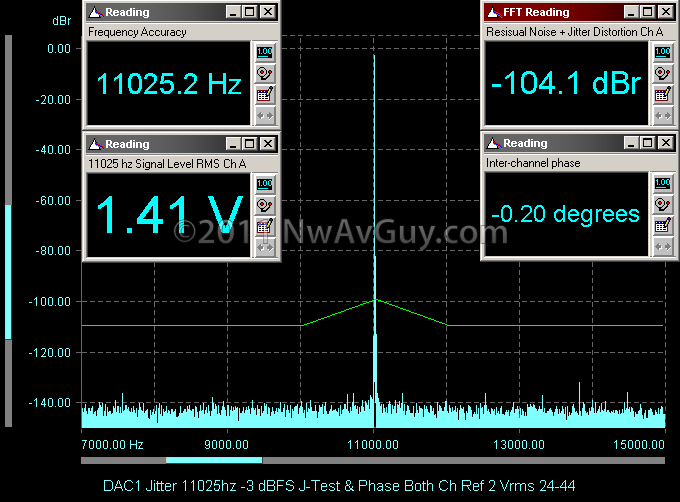

SO HOW MUCH IS TOO MUCH? Based on all the research I’ve done, if the total sum of all the jitter components is less than –100 dBFS within the audio band, the jitter will be inaudible. I’ve further tried to clarify this by developing a “limit guideline” with individual components under –110 dBFS, with a bit more leniency close to the fundamental test signal but still under the –100 dBFS limit. Here’s an example of the DAC1 with the limit line shown in green. You can see the $1600 DAC performs very well on jitter:
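The rule of thumb above can be written as a small check. Jitter components at different frequencies add in power, not in dB, so ten components each at -110 dBFS already total -100 dBFS. The function names are hypothetical, the power sum assumes the components are uncorrelated, and this checker ignores the extra leniency near the test tone.

```python
import math

def total_dbfs(component_levels_dbfs):
    """Power-sum individual jitter components (each in dBFS) into one total level."""
    power = sum(10 ** (level / 10) for level in component_levels_dbfs)
    return 10 * math.log10(power)

def passes_guideline(component_levels_dbfs, total_limit=-100.0, per_component_limit=-110.0):
    """True if the total is under total_limit and every component is under
    per_component_limit (no extra allowance near the fundamental)."""
    return (total_dbfs(component_levels_dbfs) < total_limit
            and all(level < per_component_limit for level in component_levels_dbfs))

print(round(total_dbfs([-110] * 10), 1))     # ten components at -110 dBFS
print(passes_guideline([-112, -115, -118]))  # a few low-level sidebands
```

The second limit matters: a spectrum with a dozen components just under -110 dBFS can still add up to more than -100 dBFS.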

MEASURING A DIGITAL SIGNAL: In the digital domain, if you're, say, looking at a digital signal coming out of an A/D converter or a CD/DVD/media player with a digital output, you can directly measure the jitter with the right instrument. The Prism Sound dScope has a low jitter internal time base clock. This allows the dScope to compare the clock in the digital signal to its own quality time base. From the timing differences it can calculate an actual jitter value in the time domain.
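In software terms, comparing a signal against a stable timebase boils down to computing the time interval error (TIE) of each clock edge. The sketch assumes you already have edge timestamps from some low-jitter timestamping instrument (how the dScope does this internally isn't covered here). It also simply aligns the ideal clock to the first edge at a known nominal period; a real analyzer would typically also remove any small frequency offset, for example with a straight-line fit. Function names are hypothetical.

```python
import math

def time_interval_error(edge_times_s, nominal_period_s):
    """TIE: how far each measured edge is from where an ideal clock of the nominal
    period (aligned to the first edge) would have put it."""
    t0 = edge_times_s[0]
    return [t - (t0 + k * nominal_period_s) for k, t in enumerate(edge_times_s)]

def jitter_stats(tie):
    """Return (RMS, peak-to-peak) of a TIE sequence after removing its mean."""
    mean = sum(tie) / len(tie)
    centered = [e - mean for e in tie]
    rms = math.sqrt(sum(e * e for e in centered) / len(centered))
    return rms, max(centered) - min(centered)

edges = [0.0, 1.1, 1.9, 3.1, 3.9]   # toy timestamps with a nominal period of 1.0
rms, pp = jitter_stats(time_interval_error(edges, 1.0))
print(f"RMS {rms:.4f}, peak-to-peak {pp:.2f}")
```

With real data the timestamps would be in seconds and the results in picoseconds, but the arithmetic is the same.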

OSCILLOSCOPE MEASUREMENTS: Some think that if you look at a digital signal on a scope with an infinite persistence feature, the “smear” you get is jitter. But it’s not. What you’re really seeing is variation relative to the scope’s trigger point. Because the scope triggers on the incoming waveform itself, the trigger point moves along with the signal, which automatically hides much of the jitter. The only way to measure jitter is to compare the signal in question to a known highly stable source, and scopes are not, by themselves, generally set up to do that.
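A simple model shows why triggering on the signal itself under-reports jitter. Below, a clock's edges wander slowly around their ideal positions (10 ns peak sinusoidal drift; all values and function names are assumptions for illustration). Measured against an ideal reference, the error is the full wander. Measured from each edge to the previous one, which is roughly what a persistence display triggered on the signal shows for the next edge, the slow wander almost disappears because the trigger point moves with it.

```python
import math

def wander_edges(n, period_s, wander_peak_s, wander_cycles):
    """Edge times of a clock whose timing drifts slowly (sinusoidally) around ideal."""
    return [k * period_s + wander_peak_s * math.sin(2 * math.pi * wander_cycles * k / n)
            for k in range(n)]

def compare_views(edges, period_s):
    """Peak-to-peak timing error seen two ways:
    against an ideal reference clock, and edge-to-previous-edge (self-triggered)."""
    vs_reference = [t - k * period_s for k, t in enumerate(edges)]
    vs_previous = [b - a - period_s for a, b in zip(edges, edges[1:])]
    return (max(vs_reference) - min(vs_reference),
            max(vs_previous) - min(vs_previous))

edges = wander_edges(10000, 1e-6, 10e-9, 2)
ref_pp, prev_pp = compare_views(edges, 1e-6)
print(f"vs reference: {ref_pp * 1e9:.1f} ns p-p, edge-to-edge: {prev_pp * 1e12:.1f} ps p-p")
```

The reference comparison sees about 20 ns peak-to-peak, while the edge-to-edge view sees only tens of picoseconds: the same clock, three orders of magnitude apart.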

SUMMARY: My thoughts on jitter are pretty simple:

- It doesn't matter how a digital audio file is stored or whether lossless compression is used. Files played back from a PC, network file server, or portable flash-based player, whether WAVE or FLAC, are all the same with respect to jitter at the file level. It's what happens later in the playback process that matters.

- Many devices exist which effectively reduce playback induced jitter to very likely inaudible levels. If you're worried about jitter it's easy enough to choose one of these devices--especially ones that back up their marketing claims with some actual valid test results (like Benchmark, Anedio, etc.).

- If you’re worried about jitter, choose a device that has been independently tested for jitter with similar jitter spectrums to those shown in this article. Look for sidebands and “spread” under –110 dBFS.

- Jitter present on recordings, from the A/D process, is a more serious problem and cannot be removed by any playback hardware.

WAV – FLAC

I don’t think the claim is a difference in bits.

This has been tested so often that we can safely assume that FLAC is lossless indeed.

The claim is that there is more CPU needed to convert FLAC to raw PCM.

This additional electrical activity might disturb the clock driving the DA conversion.

If there is a difference it is a difference in jitter level.

Would be very interesting if you could measure this phenomenon.

http://thewelltemperedcomputer.com/KB/WAV-FLAC.htm

Kind regards

Vincent Kars

Vincent, you bring up a good point. And thanks for posting the interesting link. I was aware of your site and it has lots of good info. I've added a link to this article and also to your sound quality page in my main links to the right.

I've also revised the article above to include my thoughts on this issue. The short answer is I don't believe CPU loading makes any difference. Thanks again.

Great blog! As a fellow engineer I appreciate your approach of backing up claims with quantitative facts.

Another thing that seems to be in hot debate right now is what isochronous vs. async USB interfaces do to jitter, especially as affordable async USB/S/PDIF converters are being introduced. There already seem to be detractors of both the Halide and the HiFace. Then Musical Fidelity, which has a long established reputation, enters the fray. It'd be interesting if you measured one of these things.

Thanks for the encouragement. There are some interesting debates about async, etc. Did you read the Benchmark "paper" I linked in this article? I tend to side with Benchmark's view of what matters and how to best measure it. There are plenty of manufacturers claiming "100X" reductions in jitter, etc., but relative to what? Measured how?

My personal view is jitter is a lot like THD. Once it's below a certain level it's safe to assume it's inaudible. The catch is it's harder to quantify jitter--especially in the analog domain such as the output of a DAC. So it's open to debate as to what's "low enough" and what measurement or criteria should be used.

And I believe, like so many other things in the audiophile world, much of what we read about jitter is pure marketing hype, audiophile myth, psychological bias (i.e. imagined differences), or genuine audible differences related to something else. I don't believe jitter is an audible problem in reasonably well designed products.

I make such claims because listening tests tend to support my view. For example, an old inexpensive Sony CD player sounded the same as an expensive Wadia CD transport/DAC in the Matrix Audio blind test (see link in the right hand column). Likewise, there are published AES papers of listening trials that demonstrate running SACD high resolution audio through a 16/44 A/D/A process doesn't create any audible differences under real world conditions (i.e. Meyer/Moran).

If jitter were typically audible, you would expect it to show up in blind listening tests. But, to my knowledge, only artificially high amounts of jitter have been shown to be readily audible. But, as is often the case with audiophile gear, it can provide some peace of mind if you know a particular piece of gear has relatively low jitter.

So I'll keep making jitter measurements and people can draw their own conclusions from the results. But, ultimately, it would be interesting to conduct a well run blind listening test of a high jitter DAC and a low jitter DAC that otherwise measured similar. If such a test has been done, I'm not aware of it.

Thank you for your interesting explanations.

But isn't it an issue with measuring the jitter that the jitter of the device being measured and the measuring device are overlaying? As you said there is no perfect clock. So if you are measuring an analog signal and performing FFT on it this will only give you reliable results if you have a perfect clock.

To Anonymous above, if you mean performing an analog FFT spectrum analysis as I've shown, no they don't share the same clock. Only professional studio equipment with a clock output would allow such a test. The FFT analysis uses windowing so it can operate asynchronously. Please see the Benchmark and Anedio links I provided in the article. Both links discuss the method I use further and it's generally considered reliable. The results are 100% repeatable.

Good discussion of some of the issues relating to jitter, a.k.a. phase noise. Do you have a source for a 24-bit JTest .wav file? It looks like your DAC1 Pre chart is done with a 16-bit Jtest. We've got an APx515 in-house, and it's quite instructive to put it on the output of various DACs. We also look at phase noise of clocks, which gives one a more generalized characterization of issues that will give rise to jitter, and they operate somewhat independently. For example, one could have a low jitter clock and interface (USB, whatever), but if the design is still sensitive to data jitter, as stimulated by the JTest, it will show poor performance.

The JTest is thus a jitter "stimulator", so to speak, and I think is really designed to "show off" data-pattern related jitter. There are many other types, unfortunately.

Your observations certainly get the conversation going, and once audio enthusiasts start using low-jitter interfaces and DACs, then the fun begins!

Prism Sound, the makers of the dScope, as you may know helped invent the Jtest. The dScope software includes Jtest files in a proprietary format that are "played" by the dScope's digital signal generator in 16 or 24 bit. And, yes, it's designed to expose pattern-related jitter. There's an AES paper on the topic.

I agree that to have low final jitter you need to both recover the clock with low jitter and have a local clock with low jitter (phase noise). It's not, however, typically practical to measure the phase noise of internal clocks directly except perhaps during R&D of a new product.

I think the best solution, and the only one I know of that works across a broad range of digital audio devices, is the spectral analysis techniques documented in this article and elsewhere. Using the Jtest pattern to "provoke" the hardware does make a difference with some devices compared to a regular unmodified sine wave of the same frequency.

I still haven't seen a good case for any jitter-rejection mechanism more complex than your garden-variety PLL. Not saying that ASRC isn't a neat solution, but it's not strictly necessary to top an adequate solution which was already universal in the 80s.

Yeah, you'll get almost as many opinions on jitter as people you ask. I don't know who to believe. I can say (as can be seen in the graphs above) Benchmark's ASRC implementation does an impressive job on the widely accepted 11,025 Hz Jtest. How much of that advantage is audible is much harder to say. And obviously I'm not comparing the Benchmark to high-end PLL designs, but it would be interesting to do so.

The sad thing to me is people are largely going backwards these days with "multi box" solutions such as PC -> USB -> S/PDIF -> DAC -> Headphone amp. That's just inviting jitter problems, level issues, noise issues, etc. compared to one engineer designing it all into one box with a master clock, precise gain matching, etc.

I don't know of any DAC-and-headphone-amp-in-a-box DIY or commercial designs that are reasonably priced... Sure, the Anedio and Benchmark DACs look great, but I certainly can't afford them... Multi box DIY projects are financially feasible to me, however. Like an O2 combined with AMB's Gamma things. Too bad AMB doesn't have any proper measurements... By the way, how would one go about reducing jitter risks in a multi box setting? Keep the cables short and pray that the individual boxes don't do stupid things?

Keeping cables short might help, but a much bigger problem is the phase noise of the various clocks used and other hardware issues in a multi-box solution. I'm still looking for a low cost solution. The Centrance DACport and DACmini are not cheap, but they're 1/4 to 1/2 the cost of a Benchmark, Grace, etc. and they do true 24/96 over USB without needing special drivers. I'm sure even the DACport will put the AMB gamma (which can only do 16/48 over USB) to shame.

The DACport has a 10 ohm output impedance, which is something to keep in mind if you use BA IEMs.

Good point, Maverick. I was speaking more of the DACport as a pure DAC, not its headphone output. Although for many non-BA headphones it's probably acceptable. The DACmini can be ordered with "zero ohm" output impedance.

Hmm...I didn't know they started doing that.

If you're just using it as a DAC, I think the DACport LX is supposed to be designed for that, with a line output instead of a headphone amp. I don't know anything else about it though.

First let me thank you for your wonderful blog! I found it a few days ago and have done nothing since but read the articles, comments and references you have provided (some even multiple times, since I'm not good at electrical engineering and it takes a while for certain things to make sense).

I think it's a shame that with audio gear one has such a hard time finding actual numbers on how it performs. Seeing how much trouble you go through to provide accurate results, I can somewhat understand why manufacturers don't bother though.

But when I read that your $2000 Benchmark DAC1 is about twice as expensive as professional hardware used in recording studios and that most manufacturers of amps and other gear often don't know the actual performance of their products, I realized that maybe there really isn't that much difference between a $50 and a $5000 product.

So again, thank you for saving me and others from wasting money on hardware that delivers little more than a placebo-effect!

My question for this article is why jitter in a digital signal matters. Isn't the idea of a digital signal that it either works (if the jitter caused by the cable, EMI, etc. is within parameters) or it doesn't (if the distortion is too big)?

Wouldn't a flipped bit in an S/PDIF or USB signal result in a checksum error causing the packet containing the faulty data to be either retransmitted or playback to entirely fail if the link quality is too low to provide sufficient bandwidth?

Hi! A good measuring technique for jitter is the eye pattern or eye diagram.

http://en.wikipedia.org/wiki/Eye_pattern

If you have a good scope and can access the clock signals, it is simple to measure. Try it out!

Glad you liked the article and blog. The issue here isn't a flipped bit, it's whether the time between samples during playback matches the time between samples when the recording was made. If the samples shift in time, it creates a form of distortion, especially at high frequencies.

I think you didn't get the idea of the eye pattern. You set a fast scope to trigger on the crossing point (of the clock, the S/PDIF or any digital data) and set the scope to retain and show multiple crossings. I think it is called "hold data". So you get one reference point and multiple other crossings. You can see the jitter just by looking at the diagram: you accurately measure the shortest and the longest time, compare them to the nominal, and say "this system has x% or y ps jitter". It is one of the best ways to measure jitter.

more here:

http://focus.ti.com/general/docs/lit/getliterature.tsp?baseLiteratureNumber=SLYT179&track=no

(trust me, I'm also an electrical engineer :), but sorry for my English)

USB is packet-based. Buffering is unavoidable, and I believe it has to have about 1ms of buffer for minimal operation.

It's a matter of reconstructing a well-timed stream from the buffer and feeding it to a low-jitter DAC. Attempts to remove jitter by inventing some new way to transfer data over USB are either sheer incompetency or a blatant lie. I suspect basic electric and RF horribleness in the typical failure of USB soundcards to provide jitter-free sound.

When going non-isochronous though, it may be possible to eliminate occasional dropouts, at the cost of occasional latency problems which need custom management on hardware designer's end.

GuiltySpark: I don't think S/PDIF has checksums at all, though I'm not at all knowledgeable about that. And timing information being embedded into each bit of signal can be a problem too.

USB has checksums, but isochronous/HID audio data will not be re-sent. So the endpoint has basically two choices: either try to play a SLIGHTLY faulty bitstream, or drop a complete packet, causing an audio disruption. I don't seriously think it would be a problem to have a bit flipped once a week or so. USB data is clocked at a fixed rate. The DAC timing can either be fixed independently at the endpoint, which then instructs the host to vary the amount of data transferred each frame (frame = 1ms of host clock in USB-speak), or the USB endpoint can try to gradually tune the DAC clock to the frequency of frames, maintaining the same amount of data per frame. In either case, the data is burst at a fixed rate, and a different amount of data merely means a different amount of unused leftover time in each frame.
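To make the frame arithmetic concrete: at 44.1 kHz a 1 ms full-speed USB frame should carry 44.1 samples, which isn't a whole number, so the host ends up sending mostly 44-sample packets with an occasional 45. A minimal sketch using integer arithmetic (the function name is hypothetical; in asynchronous mode the device's feedback would nudge these counts to follow its own clock, which isn't modelled here; high-speed USB uses 125 µs microframes, i.e. `frames_per_second=8000`):

```python
def samples_per_frame(sample_rate_hz, n_frames, frames_per_second=1000):
    """Whole samples carried in each USB frame so that the long-term rate is exact."""
    sizes = []
    sent = 0
    for k in range(1, n_frames + 1):
        due = k * sample_rate_hz // frames_per_second  # samples owed by the end of frame k
        sizes.append(due - sent)
        sent = due
    return sizes

print(samples_per_frame(44100, 10))
print(samples_per_frame(48000, 5))
```

Because the packets are only buffered, not played as they arrive, the uneven 44/45 pattern doesn't by itself become jitter; what matters is the clock that reads the samples out of the buffer.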

@Siana Gearz, Thanks for your comments. I agree with them. Indeed S/PDIF itself does not have error checking. USB does, but only in certain modes.

I don't believe most USB interface chips used in DACs are capable of buffering enough audio to allow re-transmitting a bad packet. As Siana suggests, either the audio is played with the error, or the entire packet is discarded creating a dropout.

@Tszaboo, I agree about eye patterns. The dScope can automatically display and analyze them for S/PDIF and AES digital audio signals as can my high speed scopes. But, in the case of USB audio, it doesn't do much good to look at the USB signal lines. If you probe inside the DAC it's usually possible to look at the I2S signals. But that's still of limited usefulness because...

Ultimately what matters most is the analog output of the DAC. And the DAC chip itself usually has some degree of jitter rejection. Even if there's significant jitter on the I2S signal/clock into the DAC--from USB or S/PDIF--that doesn't predict the audio performance. So I think the J-Test approach which is used by the dScope, Audio Precision, Miller jitter analyzer, etc. is still the best overall option for measurement.

Pure audio playback can easily be clocked with a crystal oscillator right at the DAC chip, which is for practical purposes jitter free. This applies both to a computer and to a CD transport. One example I know is the HRT Music Streamer II with its asynchronous USB mode.

Whenever there is clock recovery, which invariably takes place with some form of PLL, there is clock jitter. If the PLL is good, the jitter is rejected sufficiently so that there is no audio degradation.

Jitter is the only reason that I could imagine that different cables produce different sound. A bad cable could conceivably degrade the signal, so that the quality of the clock recovery becomes more or less slightly compromised. If for example the signal were attenuated a lot, the signal to noise ratio in the receiver, be it electrical or optical, would decrease and the phase detection would pick up noise. Imprecise phase detection leads to imprecise control signals for the PLL's frequency.

Whether jitter is a problem or not with a particular setup is an entirely different question however. It should be measurable, but I would be wary if anyone claimed good or bad audio quality caused by good or bad jitter rejection based on a listening test alone.

There are many instances where clock recovery is needed, for example in television broadcasting, where everything is synced to a house clock. Or a studio full of outboard gear.

Even a simple video playback requires some form of audio to video synchronization to guarantee lip sync. If for this purpose, a sample rate converter is used, a very exact phase detection is needed because phase errors result in noise on the output of the sample rate converter.

I've dealt with audio to video synchronization in the past when I designed broadcast video transmission equipment.

My nickname for the purpose of this discussion: EE1000.

It's very important to distinguish data integrity and jitter. Data integrity means that sample for sample, the same data arrives at the DAC that is contained on the storage media.

Jitter means that the ADC's or DAC's clock is frequency modulated. Or, that a sample rate conversion is based on erroneous phase detection.

It amazes me that data integrity is even an issue. How hard can it be to move and buffer a few million bits per second reliably over a few meters of cable? Well, it isn't hard, except perhaps for computer driver development. I've experienced tons of computer audio gear that produced clicks and dropouts, at least at some sample rates and bit depths. Call me arrogant, but I think software guys just don't know much about robust buffer management. In many instances, data loss could be detected and reported to the user in software or firmware simply by detecting buffer underruns or overruns. But almost no one bothers to do that, which is a shame.
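A minimal sketch of the kind of reporting described above, assuming a simple sample FIFO between the source and the DAC (the class and its names are hypothetical, not taken from any real driver). Instead of silently dropping or padding samples, it counts every overrun and underrun so software could surface them to the user.

```python
from collections import deque

class MonitoredFifo:
    """Hypothetical sample FIFO that counts data loss instead of hiding it."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.buf = deque()
        self.overruns = 0    # incoming samples dropped because the FIFO was full
        self.underruns = 0   # output samples replaced by silence because it was empty

    def write(self, samples):
        for s in samples:
            if len(self.buf) >= self.capacity:
                self.overruns += 1
            else:
                self.buf.append(s)

    def read(self, count):
        out = []
        for _ in range(count):
            if self.buf:
                out.append(self.buf.popleft())
            else:
                self.underruns += 1
                out.append(0)   # silence, heard as a dropout
        return out

    def report(self):
        """Counters a driver or app could show to the user."""
        return {"overruns": self.overruns, "underruns": self.underruns}

fifo = MonitoredFifo(capacity=4)
fifo.write([1, 2, 3, 4, 5, 6])   # source delivers 6 samples into room for 4
fifo.read(6)                     # sink asks for 6 samples
print(fifo.report())             # {'overruns': 2, 'underruns': 2}
```

The point is not the FIFO itself but that the loss is counted where it happens, which costs almost nothing.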

Clicks and dropouts are much easier to hear with a 1 kHz sine tone than with music. Usually, if no clicks or dropouts occur for several minutes, the setup is OK in that respect.
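As a rough illustration of why a pure tone makes glitches easy to catch, here is a sketch (hypothetical function, assumed threshold) that checks a captured sine against the exact three-term recurrence every sinusoid obeys. On a clean tone the prediction residual stays near zero; a click or dropout shows up as a spike. With real captures the threshold would have to sit above the noise floor, and the analysis frequency must match the played tone closely.

```python
import math

def find_clicks(x, freq, fs, threshold=1e-3):
    """Return indices where x departs from a pure sine at `freq`.

    Any sinusoid satisfies x[n] = 2*cos(w)*x[n-1] - x[n-2] exactly
    (w = 2*pi*freq/fs), so the prediction residual is ~0 on a clean tone
    and jumps at clicks, dropouts and most other discontinuities.
    """
    c = 2.0 * math.cos(2.0 * math.pi * freq / fs)
    hits = []
    for n in range(2, len(x)):
        residual = x[n] - (c * x[n - 1] - x[n - 2])
        if abs(residual) > threshold:
            hits.append(n)
    return hits

fs, f = 48000, 1000.0
tone = [0.5 * math.sin(2 * math.pi * f * n / fs) for n in range(4800)]  # 0.1 s at about -6 dBFS
glitched = list(tone)
glitched[2400:2410] = [0.0] * 10   # simulate a 10-sample dropout

print(find_clicks(tone, f, fs))       # clean tone: no hits
print(find_clicks(glitched, f, fs))   # hits cluster around the dropout
```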

At any rate, you can't mix data integrity and jitter in the same discussion, because once data integrity is lost, there are clicks and dropouts and any hope for clean audio is gone.

EE10000

Thanks for your comments. I'm mostly in agreement, but it's worth pointing out that nearly all USB DACs, especially reasonably priced ones, use adaptive mode and are not genuinely asynchronous. I've read and heard mixed things about HRT's async implementation using the TI TAS1020B, and I have not personally tested one. I know TI is discontinuing that chip in favor of newer designs. But the MS2 seems like a fair amount of DAC for the money--especially given the async capability.

In my opinion, the best overall single jitter test is still the J-Test in the analog domain as it takes into account most (or perhaps even all) sources of jitter, over any interface (including USB), and even works on playback-only devices like an iPod.

Cables not only attenuate and add noise but also attenuate high frequencies more than low ones, slowing the rise time of the digital data and rounding the "corners". That makes the edge detection more "sloppy", which is a prime source of jitter.
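A common first-order estimate (not specific to any interface) is that amplitude noise on an edge turns into timing error in proportion to how slowly the edge crosses the receiver's threshold: timing error ≈ noise voltage / slew rate. The sketch below assumes a linear edge, a 0.5 V swing and 5 mV RMS of noise; the numbers are illustrative only. A ten times slower edge gives ten times the timing error for the same noise.

```python
def timing_error_from_noise(noise_rms_v, swing_v, rise_time_s):
    """Approximate RMS edge-timing error caused by voltage noise on an edge.

    Assumes a linear edge whose 10-90% rise time is rise_time_s, so the slope
    through the receiver's threshold is about 0.8 * swing / rise_time.
    """
    slew_v_per_s = 0.8 * swing_v / rise_time_s
    return noise_rms_v / slew_v_per_s

# Same noise and swing; edge slowed from 5 ns to 50 ns by a lossy cable (assumed values)
for rise in (5e-9, 50e-9):
    err = timing_error_from_noise(noise_rms_v=0.005, swing_v=0.5, rise_time_s=rise)
    print(f"rise time {rise * 1e9:.0f} ns: about {err * 1e12:.1f} ps RMS timing error")
```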

Jitter, in general, is a gray area or especially "squishy" subject when you start trying to correlate it with sound quality. On the one hand, some audiophiles love "squishy" as it gives them a handy explanation for why, according to some, most digital sources sound different from each other. But, on the other hand, even guys like John Atkinson, who have run jitter tests on probably hundreds of devices, often throw up their hands when asked "what does the result mean in terms of sound quality?".

Blind testing presents an interesting viewpoint. While some sources do indeed sound different, many of the subjective differences attributed to jitter (and/or other difficult to correlate parameters) end up disappearing if you simply throw a bed sheet over the equipment being auditioned without changing anything else. That's yet another reason nearly all subjective audiophiles claiming to hear such differences avoid any sort of blind test like the plague. See: Subjective vs Objective

Comparing DACs is very hard. You need a way to switch between them while playing the same sound, and their levels need to be matched to 0.1 dB or better, which is roughly 1 percent in voltage, well within the accuracy of a regular digital multimeter.
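The arithmetic behind the 0.1 dB figure, as a small sketch: voltage ratios convert with 20·log10, so 0.1 dB works out to about 1.16 percent. The multimeter readings in the second example are hypothetical.

```python
import math

def db_to_voltage_ratio(db):
    """Voltage (amplitude) ratio for a level difference in dB."""
    return 10 ** (db / 20)

def voltage_ratio_to_db(ratio):
    """Level difference in dB for a voltage ratio."""
    return 20 * math.log10(ratio)

# How big a voltage mismatch is 0.1 dB?
print(f"0.1 dB = {(db_to_voltage_ratio(0.1) - 1) * 100:.2f} % in voltage")

# Hypothetical multimeter readings of two DACs playing the same 1 kHz tone
print(f"mismatch: {voltage_ratio_to_db(2.013 / 2.000):.3f} dB")
```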

Ideally, there would be blind testing with ABX hardware. I considered building one, but there are just so many other things to do, like hunting for good music to listen to, or going dancing at a club.

I have a Music Streamer II, the basic version. It seems fine, high output level, no noise, clean, no loss in bass definition that some cheap DACs seem to have.

There are no fantastic DACs. DACs can never be "fantastic" or whatever language some people use; the best they can be is transparent. If two DACs that are considered very good are indistinguishable in blind ABX testing, then they are both transparent. If another good DAC were to sound different, it would be a tricky job to ascertain which one is better, and the better one should become the new reference for transparency.

Sorry for my ramblings.

EE10000

I'm the guy who suggested that you review the Native Instruments Traktor Audio 2, a USB DJ soundcard with headphone outputs supposedly capable of driving 8 Ohms. I want to know if I'm backing the right horse :-).

If it helps, I'll buy you one.

About high frequency roll-off through cables: don't modern data interfaces use sinusoidal waveforms? The spectrum of the signal should ideally be bandwidth limited at both the top and the bottom, so that even cheap cables won't affect the edge detection.

Analog audio does not use matched impedance cabling. The cable is just a capacitor. With increased cable length, the high frequencies get attenuated.
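To put a number on "the cable is just a capacitor": a source impedance driving the cable's capacitance forms a first-order RC low-pass with its -3 dB corner at 1/(2πRC). The sketch below assumes 100 pF per meter and ignores the load impedance and cable inductance; both are simplifications. A low-impedance source barely notices a couple of meters, while a high-impedance source on a long run starts to eat into the audio band.

```python
import math

def cable_corner_hz(source_ohms, pf_per_meter, meters):
    """-3 dB corner of the RC low-pass formed by a source impedance driving
    a cable's shunt capacitance (load impedance and cable inductance ignored)."""
    c_farads = pf_per_meter * 1e-12 * meters
    return 1.0 / (2 * math.pi * source_ohms * c_farads)

# Assumed 100 pF/m cable; a low- and a high-impedance source
for ohms, meters in ((100, 2), (10_000, 10)):
    corner = cable_corner_hz(ohms, 100, meters)
    print(f"{ohms} ohm source, {meters} m: -3 dB at about {corner / 1e3:.1f} kHz")
```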

But I think SPDIF does. And USB as well, with twisted pairs. Matched impedance allows for much better high frequency response. To a first approximation, with increased cable length the signal gets attenuated but the waveform stays the same.

SPDIF is probably not modern in that respect.

But I'm guessing USB 2 and PCI-E are.

Just trying to say, if I were to design a new data transmission standard, it would not rely on rectangular waveforms.

EE10000

No, the interfaces in question do not use sine waves although the signal at the other end can sometimes resemble one after having gone through the cable version of a low-pass filter. The outputs of the chips that drive say a USB bus can be described as "controlled rise time rectangular waves". The rise times are slowed to ease power consumption and reduce RFI/EMI for FCC and CE reasons. USB 1.X (12 Mbit) is not terminated while USB 2.0 (480 Mbit) is terminated. Coaxial S/PDIF and I2S are also squarish wave shapes.

I have a full ABX box and it's been a very useful device. Blind testing, when the differences are subtle, requires a fair amount of effort, ideally lots of trials, and multiple people. You can also do a lot solo with Foobar and the ABX add-on. Someday I plan to do an article on various files available around the web that can be compared blind with Foobar. Some are really eye-opening. The Audio DiffMaker project is also useful, fun, and has opened some eyes.
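Why lots of trials matter can be shown with a one-sided binomial test: the chance of scoring at least k out of n ABX trials by pure guessing. This is a generic sketch, not the calculation any particular ABX tool performs. The same 75 percent score is far more convincing over 16 trials (about 0.038) than over 8 (about 0.145).

```python
from math import comb

def abx_p_value(correct, trials):
    """Probability of getting at least `correct` right out of `trials`
    ABX trials by pure guessing (one-sided binomial test, p = 0.5)."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials

print(f"12/16 correct: p = {abx_p_value(12, 16):.3f}")
print(f" 6/8 correct:  p = {abx_p_value(6, 8):.3f}")
```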

I try to mostly review products that, for now, have relatively broad appeal to the audiophile (or at least "high quality") headphone crowd. I'm afraid DJ gear, like the Traktor, doesn't really fit the mold. I get many requests every week to review everything from vintage stereo receivers to $2000 DACs. But many seem not to realize I'm doing all this on my own time, on my own nickel, with zero revenue. It's multiple days of work to fully test, document, write up, and worst of all, edit a product review for my blog. And they still have lots of typos and formatting errors. ;)

Thank you for the insight on the signal waveforms. I did realize that you do this on your own time and money, and I had a vague idea of the amount of time involved. About the Traktor, it's a low cost USB soundcard with a built-in low impedance headphone amp and volume control. I think it's worthy of your platform, but I respect your choice. Thanks so much again. EE10000

I have a feeling the less expensive FiiO E10 will easily beat the Traktor 2 as a headphone DAC but that's just a hunch. NI obviously spent most of their budget on other things (like the giant control surface, etc.). Also, I've yet to measure any piece of semi-pro or pro sound gear that had a headphone output impedance under 10 ohms. Most are 22 or 47 ohms. Even Asus can't manage to do low impedance headphone outputs with their high-end Xonar line.

You're exactly right.

I ran a 1 kHz sine wave into my Traktor Audio 2 and got 2.847 V open and 2.331 V into 470 Ohms, and the resulting output resistance is 105 Ohms. This is obviously disappointing. Their specs recommend phones with 8 Ohms and up, go figure.
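The voltage-divider arithmetic behind that figure, as a sketch: with an unloaded reading and a reading into a known load, r_out = r_load × (v_open / v_loaded - 1). Plugging in the readings above gives about 104 Ohms, close to the 105 Ohms quoted.

```python
def output_resistance(v_open, v_loaded, r_load):
    """Source (output) resistance from an unloaded and a loaded voltage reading.

    Voltage divider: v_loaded = v_open * r_load / (r_load + r_out),
    so r_out = r_load * (v_open / v_loaded - 1).
    """
    return r_load * (v_open / v_loaded - 1.0)

print(f"{output_resistance(2.847, 2.331, 470):.1f} ohms")
```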

My Denon AH-D2000 headphones' DC resistance is 23 Ohms.

On my Behringer Xenix 502 mixer, I measured an output resistance of 18.6 Ohms on the headphone output. So I use this mixer as a headphone amplifier for now.

EE10000

So much for "low impedance headphone output"! Welcome to the world of pro gear headphone jacks :(

Your mixer breaks Behringer tradition if it's really around 19 ohms. Even their headphone amps are 47 ohms (right in the datasheets), as is the UCA202 and other Behringer gear I've checked. But even 19 ohms still means it's only well suited to headphones of around 150 ohms or greater.

I have the AH-D2000. Their AC impedance is 25 ohms, and they need an output impedance of 25/8, or about 3 ohms or less, to sound their best.
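The rule of thumb in use here, written out as a sketch: output impedance should be no more than 1/8 of the headphone impedance (the ratio of 8 is inferred from the "25/8" above). Run the other way, it gives the minimum headphone impedance a given output suits, e.g. 8 × 19 ohms = 152 ohms.

```python
def max_output_impedance(headphone_ohms, ratio=8):
    """Highest source impedance allowed by the 1/8 rule of thumb."""
    return headphone_ohms / ratio

def min_headphone_impedance(output_ohms, ratio=8):
    """Lowest headphone impedance a given output suits under the same rule."""
    return output_ohms * ratio

print(max_output_impedance(25))     # AH-D2000, 25 ohm AC impedance
print(min_headphone_impedance(19))  # mixer headphone output of about 19 ohms
print(min_headphone_impedance(47))  # typical 47 ohm pro-gear headphone jack
```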

Do you, by any chance, have any recording similar to what you listened to at the conference, demonstrating how jitter sounds?

Also, is it possible to assess jitter level without any equipment (I'm not saying "measure", obviously, but assess if jitter exceeds the audibility threshold or not)?

@Alexium, I don't have such recordings but I've thought about contacting the conference presenter and seeing if he would share a couple excerpts of what he played for us. If I come across something I'll do a quick article on the subject. I agree it would be useful for people to hear.

I'm not aware of a way to assess jitter by itself without using something like the J-test and a suitable spectrum analysis. In general, however, a proper level-matched blind A/B or ABX testing is very useful. If you compare two DACs/digital sources, and cannot hear any difference, it's safe to assume any differences in their jitter performance are not generally audible or significant. If you're specifically concerned about jitter, well recorded piano music is especially revealing.

If you do hear a difference in a blind A/B test, it's hard to know if it's attributable to one having much higher jitter or some other audible problem. You would need to measure both sources on a proper audio analyzer and hopefully the results would help reveal what's most likely causing the difference.

http://www.diymobileaudio.com/forum/how-articles-provided-our-members/78465-mathematics-jitter.html

Above is a thread from Jeff (AKA Werewolf, Lycan). He used to be a VP at Crystal and helped design one of the first multibit DAC chips (IIRC). The thread explains jitter, and further into the thread there was an attempt to create a clean test file and add jitter to it. I don't think it was ever completed, but it might be a good place to start when looking into jitter's audibility.
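Not the test file from that thread, but a minimal sketch of the same idea under stated assumptions: sample a clean 11025 Hz tone at instants disturbed by sinusoidal jitter (1 ns peak at about 344.5 Hz, both hypothetical values) and look at the resulting sidebands. For small jitter each sideband sits about 20·log10(π·f·Δt) below the tone, roughly -89 dB here. Both frequencies land exactly on DFT bins, so no window is needed; plain Python keeps it dependency-free, at the cost of speed for large sizes.

```python
import cmath
import math

def jittered_sine(freq, fs, n_samples, jitter_peak_s, jitter_freq):
    """Sample sin(2*pi*freq*t) at instants shifted by sinusoidal clock jitter."""
    samples = []
    for n in range(n_samples):
        ideal_t = n / fs
        t = ideal_t + jitter_peak_s * math.sin(2 * math.pi * jitter_freq * ideal_t)
        samples.append(math.sin(2 * math.pi * freq * t))
    return samples

def bin_magnitude(x, k):
    """Magnitude of a single DFT bin k (rectangular window)."""
    size = len(x)
    return abs(sum(v * cmath.exp(-2j * math.pi * k * i / size) for i, v in enumerate(x)))

fs, n_fft = 44100, 4096
tone_bin, jitter_bin = 1024, 32          # both exactly on DFT bins: no leakage, no window needed
f0 = fs * tone_bin / n_fft               # 11025 Hz, i.e. fs/4 as in the J-Test
fj = fs * jitter_bin / n_fft             # about 344.5 Hz jitter frequency (assumed)
dt_peak = 1e-9                           # 1 ns peak jitter (assumed)

x = jittered_sine(f0, fs, n_fft, dt_peak, fj)
carrier = bin_magnitude(x, tone_bin)
for k in (tone_bin - jitter_bin, tone_bin + jitter_bin):
    level = 20 * math.log10(bin_magnitude(x, k) / carrier)
    print(f"sideband at {fs * k / n_fft:.1f} Hz: {level:.1f} dB relative to the tone")
print(f"small-jitter prediction: {20 * math.log10(math.pi * f0 * dt_peak):.1f} dB")
```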

I think I may have finally understood what jitter is .. after all these months.

The problem is that the DAC relies on the "carrier signal" of S/PDIF (or USB) to drive its DAC chip, rather than having its own dedicated clock for that purpose, right? Or did I get it wrong? It would at least explain why jitter looks so weird and patternless (on charts), since a DAC running at the wrong, or a "variable", sampling frequency would produce all sorts of funny results, right?

Of course, if this is the case, then the question arises why anyone would ever want to do this. The carrier signal is there to ensure proper transport of the data, not to transmit a clock signal. Isn't it the norm in digital transmission that communication interfaces have their own separate clocks?

I understand that audio is somewhat latency-critical, but a minimal buffer of 2 or 3 ms worth of data would hardly be noticeable to anyone, and would allow a fully local clock (at the proper sampling frequency) to drive the digital-to-analog process. Or are accurate clock generators that expensive? (I can't really imagine that; aren't they used in pretty much everything by now?)

And it also leaves open the question of why anyone would use the "adaptive" USB transfer mode (like in the ODAC), or something as complex/expensive as ASRC (like in the DAC1), when a simple buffer could solve this issue for good.

You're welcome and you essentially have it figured out except for a few pieces. If you think about it, a USB or S/PDIF DAC doesn't usually have any control over the source. USB and S/PDIF are like a water hose pouring out water and the DAC can't control the flow. Under those circumstances you can't use a buffer in the normal way. If you slow down the music even slightly (lower the pitch) your buffer will eventually overflow. And if you play the music even a tiny bit too fast, the buffer will run dry. So the DAC, ultimately, is at the mercy of the source.

So even if you use a buffer, there can still be timing fluctuations as it tries to adjust the rate the data is clocked out at to match the incoming data rate. This is what PLLs essentially do and they are used for jitter reduction with varying degrees of success. But they're also ultimately a compromise--often between trading high frequency (i.e. sample to sample) jitter for much lower frequency jitter (related to keeping the buffer at about 50%--sort of like how a car on cruise control will slowly cycle between being slightly below and above the set speed).
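A toy model of that trade-off (a proportional control law chosen for illustration, not how any particular PLL or DAC works): the FIFO is filled at the source's rate and the output rate is nudged in proportion to how far the fill level is from half full. The output rate ends up tracking the source's rate, so slow wander of the source passes through to the output clock; the loop gain sets how quickly it follows. The 100 ppm source error, buffer size and gain are assumed values.

```python
def simulate_buffer_servo(source_rate, nominal_rate, capacity, gain, steps, dt=0.001):
    """Toy FIFO servo: fill at source_rate, drain at an output rate that is
    nudged in proportion to the fill error (assumed proportional control law).
    Returns a list of (fill_in_samples, output_rate_hz) per time step."""
    target = capacity / 2
    fill = target
    history = []
    for _ in range(steps):
        error = (fill - target) / capacity            # normalized fill error
        out_rate = nominal_rate * (1 + gain * error)  # speed up when filling, slow down when draining
        fill += (source_rate - out_rate) * dt         # samples in minus samples out this step
        if not 0 <= fill <= capacity:
            raise RuntimeError("buffer overflow or underrun")
        history.append((fill, out_rate))
    return history

source = 44100 * (1 + 100e-6)   # assumed: source clock 100 ppm fast
trace = simulate_buffer_servo(source, 44100, capacity=1024, gain=0.1, steps=5000)
for step in (0, 500, 4999):
    fill, rate = trace[step]
    print(f"t={step * 0.001:.1f} s  fill={fill:.3f} samples  output rate={rate:.3f} Hz")
```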

HF jitter typically causes the sideband "spikes" seen in this article, while LF jitter makes the base of the 11025 Hz signal "spread".

The magic, and complexity, of asynchronous USB is the DAC really can have control of the source. Under those conditions it's possible to clock the data out at a stable fixed rate as the DAC can let the incoming rate fluctuate instead. But it is typically expensive, complex, and much more involved. It's also usually not necessary to get jitter to well below audible levels. The PLL techniques above, when implemented properly, are plenty effective.

In the digital world, clocks are sometimes embedded with the data. A good example is asynchronous serial (e.g. RS232). But with those clocks even serious jitter rarely matters. S/PDIF was originally developed mainly for consumer DAT (digital tape) and MiniDisc recorders. Back then they had bigger things to worry about than some jitter. High-end studio gear, like my Benchmark ADC1 and Motu, allows for independent clock lines. That not only removes the need to embed and extract the clock, but it also allows a single master clock to drive several different pieces of hardware.

I hope that helps!

Thank you for your confirmation.

Maybe I'm underestimating the issue and the amounts of jitter involved, but I thought the clock averaged out to the specified frequency over time? So for a 44.1k signal you have ~88 samples in a 2 ms buffer and can tolerate 87 samples of jitter (i.e. the total available buffer space would be 4 ms). Since the clock of the carrier signal has to be higher than, or at least equal to, the sampling rate, you can compensate for 87 full clock ticks of jitter, and if the jitter ever got close to those levels, the transmission would fail or produce corrupted data anyway.

Meanwhile, the DAC processes the buffered-up samples at the ideal sampling rate, driven by its own dedicated (internal) clock.

The only issue here is if the sending clock kept drifting in one direction, or didn't average out before the accumulated jitter exceeded the 2 ms buffered up. Are these realistic scenarios?

My other question is how an external clock signal (when not generated by a master clock) would help here. Wouldn't that be subject to the same issue of "smearing", unless you used higher-quality interfaces/cables/etc.? I have read in other places too that if S/PDIF didn't have the clock signal embedded, but sent over an additional cable, jitter would be less of an issue, but I don't really see why.

And one final question: since jitter does change the reconstructed analog signal of the DAC (that link to diymobileaudio said it essentially modulates it), shouldn't a jitter test check not just for what has been added to that perfect signal, but also for what is missing? That is, shouldn't the "height" (level/loudness) of the 11025 Hz tone in the J-Test be checked too, in addition to just checking how loud the distortions are? You provide a dBFS reading of the level of the test tone here, but your other articles don't have them in dBFS, and other sites like Stereophile don't report them at all. Also, Stereophile seems to be using the J-Test signal at -6 dBFS rather than at full scale. Does that have any effect on the results?

The issue is you can't clock out the data at a fixed rate as you suggest when you can't control the source of the data. The playback clock HAS to adjust itself to the incoming data rate. No watch keeps perfect time forever because no crystal is perfectly accurate. The in and out rates have to match or the buffer will always eventually overflow or run dry. So the output clock, by definition, has to be variable in frequency which is itself a potential form of jitter.
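To put numbers on the "eventually": with a fixed-rate output clock, the buffer drifts by sample_rate × ppm mismatch samples every second. Using the ~88 samples of headroom from the 2 ms question and a few assumed crystal mismatches, the sketch below shows the buffer failing within seconds to a few minutes, well within a single album.

```python
def seconds_until_buffer_fails(sample_rate, ppm_mismatch, headroom_samples):
    """Time for a fixed-rate output clock to overflow (or drain) a FIFO when
    the source runs ppm_mismatch parts per million fast (or slow)."""
    drift_samples_per_second = sample_rate * abs(ppm_mismatch) * 1e-6
    return headroom_samples / drift_samples_per_second

# About 2 ms of headroom at 44.1 kHz is 88 samples; mismatches are assumed values
for ppm in (10, 50, 100):
    print(f"{ppm:>3} ppm: buffer fails after about {seconds_until_buffer_fails(44100, ppm, 88):.0f} s")
```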

Your second question is a good one. When the clock is embedded, the jitter is modulated by the audio data. In fact, that's how the J-Test works to expose jitter: it uses worst-case data to provoke more jitter. When the clock is independent, as in high-end studio gear, it can't be influenced by the audio data.
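A sketch of biphase-mark coding, the channel code S/PDIF and AES3 use to embed the clock (preambles, which deliberately break the coding rule, are omitted). The clock lives in the guaranteed transition at every bit-cell boundary, but the number and spacing of edges depend on the data: eight zeros give 8 transitions, eight ones give 16. When a band-limited cable rounds those edges, the timing of each edge depends on the pattern before it, which is one way the audio data can modulate recovered-clock jitter.

```python
def biphase_mark_encode(bits, start_level=0):
    """Biphase-mark coding of a bit sequence (S/PDIF-style, preambles omitted).

    Each bit occupies two half-cells. The line level always flips at the start
    of a bit cell (the embedded clock); a 1 adds a second flip mid-cell.
    """
    level = start_level
    half_cells = []
    for bit in bits:
        level ^= 1              # transition at every cell boundary
        half_cells.append(level)
        if bit:
            level ^= 1          # extra mid-cell transition encodes a 1
        half_cells.append(level)
    return half_cells

def transition_count(half_cells, start_level=0):
    """Number of level changes, counting from the idle start level."""
    prev, count = start_level, 0
    for lvl in half_cells:
        count += lvl != prev
        prev = lvl
    return count

print(transition_count(biphase_mark_encode([0] * 8)))
print(transition_count(biphase_mark_encode([1] * 8)))
print(biphase_mark_encode([1, 0, 1, 1]))
```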

The level of the 11025 Hz signal is specified in the J-Test standard. It's designed to be low enough not to create regular distortion products in DACs that have problems at 0 dBFS, but high enough to maximize the dynamic range of the measurement. This might not be intuitive, but any unwanted amplitude modulation of the signal will show up as distortion products in the spectrum, so that is covered by the existing measurement.

Just curious about 2 things:

Are single bit DACs (bitstream) more sensitive to phase jitter than multibit DACs (R2R)? There was a craze for aftermarket clocks in the 1990s and I could imagine that getting the clock right for a bitstream DAC where the amplitude of the output is critically dependent on the mark/space of the clock could make a difference. Maybe things have improved in the latest DACs.

I accidentally imported all the backup music files from my NAS into my media player. This meant that I had every track in each album twice. I started listening (modified UCA222 and headphones) and noticed, unexpectedly, that one track of each pair sounded slightly woolly. I discovered that the track on the NAS was the woolly one. Could this be a real effect? Maybe jitter due to the access time of the NAS? Do I need a music player with more buffering?

To the first question, I honestly don't know. There are so many variables involved I'm not sure you can say one is more jitter prone than the other.

I really doubt you're hearing a difference on your NAS tracks with an external DAC. If you use the blind ABX add-on for Foobar you can compare a local track to a NAS track that way. Nearly everything I play is on a NAS including the test tracks I use for instrumented testing. I've never seen any differences in jitter.

NwAvGuy, I'm surprised the AES convention listening test came up with ANY audibility of jitter. IMHO, this would only be audible with appallingly high levels. Do you have any more details?

The presenter did not quantify the levels of jitter we heard, but he did say they were "unusually high" or something to that effect. That said, because jitter is a more dissonant form of distortion, it's reasonable to assume it's generally more audible than mostly harmonic distortion. Still, even if that's the case, it's difficult to imagine jitter being audible if the total sum of jitter components is 80 - 90 dB or more below the signal. I use a threshold around -100 to -110 dBFS for individual jitter components with the signal at -3 dBFS to provide a margin of safety.

My newest measurements sum the more significant jitter components to obtain a single number. The ODAC measures -106.5 dBFS (-103.5 dB below the signal) on that test. See: ODAC May Update
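How separate sideband levels can be combined into one number, assuming the components are uncorrelated and added on a power basis (whether the dScope script does exactly this is an assumption here). The levels in the example are made up. The last step is the same dBFS-versus-signal bookkeeping as the ODAC figure, where -106.5 dBFS against a -3 dBFS tone is 103.5 dB below the signal.

```python
import math

def power_sum_db(levels_db):
    """Combine uncorrelated spectral components given in dB into one level."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

# Hypothetical symmetrical sideband pairs in dBFS (not measurements of any device)
sidebands = [-115.0, -115.0, -120.0, -120.0, -110.0, -110.0]
signal_dbfs = -3.0

total = power_sum_db(sidebands)
print(f"summed jitter: {total:.1f} dBFS, {total - signal_dbfs:.1f} dB relative to the tone")
```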

My formal Blind Listening Tests on jitter were done in the early 80s. In da old days, it was the LF 'broadening' at the base of the pure tone that was most audible. I've never encountered the distinct sidebands that the J test comes up with.

Have you measured any DACs where the jitter sum exceeds -90dBFS?

I'm inclined to agree about the LF "spread" being more audible in some cases but I don't really have much objective proof to back that up.

I haven't run the new dScope summed jitter test on much gear yet. But, judging from the jitter spectrums I've seen in the past, some would be over -90 dBFS. I've seen especially bad jitter from on-board PC audio, an android smartphone, and a few older USB audio devices. I've seen "spread" that reaches up past -80 dBFS.

Would simply doing a loopback with a near-FS 11.025 kHz sine wave show:

- LF spreading
- distinct HF sidebands?

I'm not hoping for an absolute test but for a cheap 'relative' test.

The hope is that the inevitable delay due to anti-aliasing filters is sufficient to allow random jitter (the LF spreading) to muck up the signal.

I'm not sure if systemic jitter (distinct HF sidebands) would be hidden by the fact that both AD & DA use the same clock in a loopback test.

Your concern is correct. The common clock would mask many forms of playback jitter, making it an invalid test. And if you did observe jitter components, you wouldn't know whether they came from the D/A or the A/D.

For some things, there's just no substitute for proper audio test equipment. Jitter testing is one of those things. The dScope produces its own very low jitter JTEST signal. And the latest dScope jitter script automatically identifies the symmetrical sidebands and sums them to provide a composite single measurement number. It can also measure the jitter directly on a digital (AES, S/PDIF, TOSLINK) signal as well as produce controlled amounts of jitter to test jitter rejection in playback devices.

But you could play around with using a different source from the device you're testing. RMAA allows that, players like Foobar allow you to select the output device, and good spectrum analyzer software should allow you to specify the input device (or you can make it the default device in the OS). With such a "split" setup you should ideally test a few different playback devices and compare the jitter spectrums to get a feel for what spectral components are from the source (they would not change when changing playback device) and which ones are from the playback device (they would change).

I think you can find downloadable JTEST WAV or FLAC files if you spend some time searching. But beware: the JTEST is only valid at a specific sampling rate. You can't play back a 44.1 kHz JTEST file at 96 kHz and expect a valid jitter spectrum. The test signal has to be at 1/4 the sampling rate.
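If no download turns up, a J-Test-style signal can be generated. The sketch below is an approximation with assumed levels and LSB polarity, not a certified test file: a repeating +A, +A, -A, -A pattern, which is a pure tone at exactly fs/4 with a peak of A·√2 (about -3 dBFS for A = 16384), plus a square wave that toggles the least significant bit every 96 samples (fs/192). Because the pattern is defined in samples, the tone is only at fs/4 if the file reaches the DAC at its native rate; an OS mixer resampling a 44.1 kHz file to 96 kHz keeps the tone at 11025 Hz, which is no longer a quarter of the playback rate.

```python
import struct
import wave

def jtest_16bit(num_samples, amplitude=16384):
    """J-Test-style 16-bit sample sequence (assumed levels, not a certified file)."""
    tone = (amplitude, amplitude, -amplitude, -amplitude)  # pure tone at exactly fs/4
    samples = []
    for n in range(num_samples):
        lsb = -1 if (n // 96) % 2 else 0   # LSB square wave, 192-sample period = fs/192 (assumed polarity)
        samples.append(tone[n % 4] + lsb)
    return samples

def write_stereo_wav(path, samples, sample_rate):
    """Write the same 16-bit signal to both channels of a WAV file."""
    frames = b"".join(struct.pack("<hh", s, s) for s in samples)
    with wave.open(path, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(2)
        w.setframerate(sample_rate)
        w.writeframes(frames)

fs = 44100
print(f"tone {fs / 4} Hz, LSB square wave {fs / 192} Hz at fs = {fs} Hz")
```

For example, `write_stereo_wav("jtest_44k1.wav", jtest_16bit(10 * 44100), 44100)` would write a 10 second file (the file name is just an example); it should then be played bit-perfect at 44.1 kHz.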

This is an old review, but belated thanks. I almost bought one of these a while back after all the praise; it didn't even occur to me that anything other than Apple would have proprietary USB jacks these days. That alone would be enough for me to write it off, and it sounds like it's lacking in many other areas as well.

I ended up getting a Sansa Clip, I love some of the options available, but I've been having a real battle syncing music and dealing with RockBox crashes. I miss my old Zune HD brick.